The role of fiscal policy

Patrick Minford is Professor of Applied Economics at Cardiff University

Recently estimated models of major economies, which have been extended to allow for banking, the zero lower bound on interest rates (ZLB), and varying pricing strategies, can account well for recent macroeconomic behaviour.

These models imply that active fiscal policy can contribute to macroeconomic stability and welfare by reducing the frequency of hitting the ZLB. Fiscal policy can also share the stabilisation role with monetary policy, whose effectiveness under the ZLB is much reduced.

1. Introduction: recent empirical evaluations of macro models and the implications for macro policy

Recent decades have seen a major financial crisis and a worldwide pandemic, together with large-scale responses from fiscal and monetary policy. A variety of attempts have been made to model these events and policy responses empirically.

In this piece I review these modelling attempts and suggest some policy conclusions. I will argue that a new class of models, in which prices are set but for varying lengths of time, can account for the shifts in macro behaviour from pre-crisis times up to the present day; these models also prescribe a key role for fiscal policy – ie. the deliberate use of public sector surplus or deficit – in stabilising the economy and preventing its slide into the zero lower bound.

This implies that fiscal rules should limit debt in the long term and not stymie fiscal policy, as happens with many of the short-term fiscal rules now in use, such as those in the UK and the EU.

Since the crisis, a number of economists have argued for a more central role for fiscal policy, given the enfeeblement of monetary policy with interest rates at the zero lower bound (ZLB). Prominent advocates of stronger fiscal stimulus for economies battling low inflation and weak demand have included Romer, Stiglitz, and Solow in Blanchard et al (2012), as well as Spilimbergo et al (2008) and Lane (2010), though with opposition from Alesina and Giavazzi (2013).

This viewpoint has seemed highly persuasive on broad qualitative grounds. However, credible quantitative assessments of the role and effects of fiscal policy have been harder to find. Providing such an assessment is what I attempt in this article, drawing on recent models that can claim to match data behaviour rather accurately.

2. Models and their empirical evaluation

In the past three decades, since the rational expectations revolution and the recognition of how ubiquitous its implications were, economists have rebuilt macroeconomic models to ensure that they have good micro-foundations – that is to say, that their assumptions are based on the actions of households and firms to meet their objectives, using rational expectations (ie. evaluating their prospects using available information intelligently).

These models assume simplified set-ups where consumers maximise stylised utility functions and firms maximise stylised profit functions. Most models assume representative agents; more recently they assume heterogeneous agents to deal with such issues as inequality and growth.

Much effort has been devoted to making these set-ups as realistic as possible and calibrating the resulting models with parameters that have been estimated on micro datasets. The models are usually labelled as ‘Dynamic Stochastic General Equilibrium’ (DSGE) models since they aim to capture how shocks affect people’s dynamic behaviour in market equilibrium.

Sometimes it has seemed as if the economists creating these models have assumed this ‘micro realism’ was enough to create a good macro model; and that therefore we should treat their models as simulating the true behaviour of the economy.

However, a moment’s reflection reveals such assumptions to be self-deluding. Even the most realistic set-ups require bold simplifications simply to be tractable; they are after all models and not the ‘real world’. Furthermore, these models are intended to capture aggregate behaviour and there is a great distance between aggregated behaviour and the micro behaviour of individuals; even heterogeneous agent models do not accurately span the variety of individual types and shock distributions.

The reasons for this gap between aggregated behaviour and the micro behaviour of individuals are manifold. One is the fairly obvious one that aggregate actions are the weighted sum of individual actions, yet we cannot be sure of the weights, which themselves may change over time and across different shocks.

Effectively we choose one constant set of weights but we need to check its accuracy. Another less obvious but important reason is that there are a host of ancillary market institutions whose function is to improve the effectiveness of individual strategies by sharing information; these include investment funds, banks and a variety of other financial intermediaries, whose activities are not usually modelled separately but whose contribution is found in the higher efficiency of those strategies.

Hence empirical work is needed to check whether these models do capture macroeconomic behaviour. It would be reassuring if well micro-founded models mimicked actual data behaviour. Then we would know that the simplification is not excessive and the aggregation problems have been conquered.

More broadly macro-economic modelling remains highly controversial even among ‘mainstream’ macroeconomists on empirical grounds: for example, Romer (2016) has argued that these DSGE models are useless for basing advice to policymakers because they fail to capture key aspects of macro behaviour.

To settle such debates we need a tough empirical testing strategy, with strong power to discriminate between models that fit the data behaviour and those that do not. The merits of different testing methods have been reviewed in Le et al (2016) and Meenagh et al (2019, 2023).

In this paper we review what we know about the empirical success of different models. We restrict ourselves to DSGE models because these are the only causal macro models we have that satisfy the requirement that people obey rational expectations.

We consider the results of empirical tests for DSGE models of the economy. Inevitably, given its size and influence, our main focus is on models of the US economy. However, we also review results for other large economies, viewed similarly as large and effectively closed.

We also review models of various open economies, such as the UK and regions of the Eurozone. What we will see is a general tendency for fiscal policy to make an important stabilising contribution according to these models.

We need a testing method that has enough power to discriminate between the models that succeed and the models that should be discarded. The method we propose is ‘Indirect Inference’ where a model is simulated in repeated samples created from historical shocks by a random selection process known as ‘bootstrapping’; this simulated behaviour is then compared with the actual behaviour we observe in the historical data, to see how closely it matches it – the model is rejected if the match is poor.

The procedure first finds a suitable way to describe the data behaviour; suitable in the sense that this behaviour is relevant to and revealing of the model’s accuracy. Usually we will use the ‘time-series’ behaviour of the data, that is, a relationship between the data and its lagged values.

The most general such relation is a ‘Vector Auto Regression’, or VAR, where all the data variables are related to the past values of all of them. In practice we generally use three such variables, such as output, inflation and interest rates, which are central to the economy’s behaviour.
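As an illustration, a VAR with one lag in these three variables can be written as below. The notation is chosen here for exposition and is not taken from the papers cited: $y_t$ is output, $\pi_t$ inflation, $r_t$ the interest rate, $c$ a vector of constants, $A$ a 3×3 matrix of coefficients and $\varepsilon_t$ a vector of residuals.

$$
\begin{pmatrix} y_t \\ \pi_t \\ r_t \end{pmatrix}
= c + A \begin{pmatrix} y_{t-1} \\ \pi_{t-1} \\ r_{t-1} \end{pmatrix} + \varepsilon_t
$$

In this form the nine elements of $A$ summarise how each variable depends on the recent past of all three, and it is a description of this kind that the model is asked to reproduce.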

As explained in Le et al (2016) and Meenagh et al (2019, 2023) cited above, the power of this test is extremely high, so the test needs to be applied at a level of power that is efficiently traded off against tractability. Too much power means that even good models are rejected, while too little power gives bounds that are much too wide on the accuracy of the model, which is what we want to assess.

In using these tests, we have found that a three-variable VAR is about right for getting the right amount of power for the test. We use this in the tests we report in what follows.
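The logic of the test can be sketched in a few lines of Python. This is a toy illustration under loud assumptions, not the procedure as implemented in the papers cited: the ‘structural model’ is a hypothetical linear law of motion (`simulate_toy_model`) standing in for a solved DSGE model, the data descriptors are only the VAR(1) coefficients, and all function names are invented here. The steps are those described above: back out the shocks the model implies from the data, bootstrap them to simulate many samples, estimate the VAR on each, and ask whether the VAR estimated on the actual data lies within the simulated distribution.

```python
import numpy as np

def fit_var1(data):
    """OLS estimate of a VAR(1) with constant; returns the flattened coefficient matrix."""
    y, x = data[1:], data[:-1]
    X = np.column_stack([np.ones(len(x)), x])
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)  # shape (k + 1, k)
    return beta[1:].T.ravel()                     # drop the constant, keep A

def simulate_toy_model(A, shocks, y0):
    """Hypothetical stand-in for a solved structural model: y_t = A y_{t-1} + e_t."""
    out = np.empty((len(shocks) + 1, len(y0)))
    out[0] = y0
    for t, e in enumerate(shocks):
        out[t + 1] = A @ out[t] + e
    return out

def indirect_inference_wald(actual, model_A, n_boot=500, seed=0):
    """Return the Wald statistic for the actual data and its bootstrap p-value."""
    rng = np.random.default_rng(seed)
    # Shocks implied by the model being tested, given the actual data.
    shocks = actual[1:] - actual[:-1] @ model_A.T
    T = len(shocks)
    sims = []
    for _ in range(n_boot):
        idx = rng.integers(0, T, size=T)          # resample whole time periods with replacement
        sample = simulate_toy_model(model_A, shocks[idx], actual[0])
        sims.append(fit_var1(sample))
    sims = np.array(sims)
    mean = sims.mean(axis=0)
    cov_inv = np.linalg.pinv(np.cov(sims, rowvar=False))

    def wald(a):
        d = a - mean
        return float(d @ cov_inv @ d)

    w_actual = wald(fit_var1(actual))
    w_sims = np.array([wald(a) for a in sims])
    # Share of simulated samples at least as far from the model mean as the actual data.
    p_value = float(np.mean(w_sims >= w_actual))
    return w_actual, p_value
```

A model would be rejected at the 5% level when the returned p-value falls below 0.05. Adding more variables or lags to the VAR lengthens the vector of descriptors being matched, which is the sense in which the choice of VAR governs the power of the test.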


3. DSGE models of the US economy

The most widely used DSGE model today is the New Keynesian model of the US constructed by Christiano, Eichenbaum and Evans (2005) and estimated by Bayesian methods by Smets and Wouters (2007). This model and the US data it is focused on makes a good starting point for our model evaluations.

In this model the US is treated as a closed continental economy. In essence it is a standard model of general market equilibrium (ie. where all markets clear) but with the addition of sticky wages and prices, so that there is scope for monetary policy feedback to affect the real economy; this last is the ‘New Keynesian’ addition. Smets and Wouters found that their estimated model passed some forecasting accuracy tests when compared to unrestricted VAR models.

Le et al (2011) applied indirect inference testing to the Smets-Wouters model, first investigating their New Keynesian version and then also investigating a New Classical version with no rigidity. They rejected both on the full post-war sample used by Smets and Wouters, using a three-variable VAR(1) (output, inflation and interest rates, with only one lag). They concluded that this model of the US post-war economy, popular as it was in major policy circles, must be regarded as strongly rejected by the appropriate three-variable test.

They then found that there were two highly significant break points in the sample, in the mid-1960s and the mid-1980s. They also argued that there are parts of the economy where prices and wages are flexible and it therefore should improve the match to the data if this is included in a ‘hybrid’ model that recognises the existence of sectors with differing price rigidity (Dixon and Kara, 2011, is similar, with disaggregation).

Finally, after estimation by indirect inference, they found a version of this hybrid model that matched the data from the mid-1980s until 2004, the period known as ‘the great moderation’; no such version (or any version) could match the earlier two sub-samples. The later sample showed very low shares for the ‘flexible sectors’.

However, when the sample was extended to include the period of financial crisis up to 2012, these shares rose dramatically and became dominant. The high rigidity of the great moderation period seems to have reflected the lack of large shocks and the low inflation rate of that period; once the shocks of the financial crisis hit, with sharp effects on inflation, this ‘rigidity’ mostly disappeared. Nevertheless there is normally some rigidity.

A DSGE model in which rigidity is shock-size-dependent is non-linear. We have the tools to solve such models. The period since the financial crisis has also seen the arrival of the zero bound on interest rates and the use of Quantitative Easing (QE, the aggressive purchase of bonds for money by the central bank) under the zero bound.

Le et al (2021) estimated such a model, complete with a banking sector and a collateral constraint that made narrow money creation effective by cheapening collateral. They found that this model finally could match the data behaviour over the whole post-war sample; in effect the shifts in regime due to the interaction of the ZLB with inflation and so with the extent of price rigidity manage to mimic the changing data behaviour closely.

However, they found that this interaction of the ZLB and price rigidity created considerable inflation variability, as the ZLB weakened the stabilising power of monetary policy on prices and this extra inflation variance in turn reduced price rigidity, further feeding inflation variance.

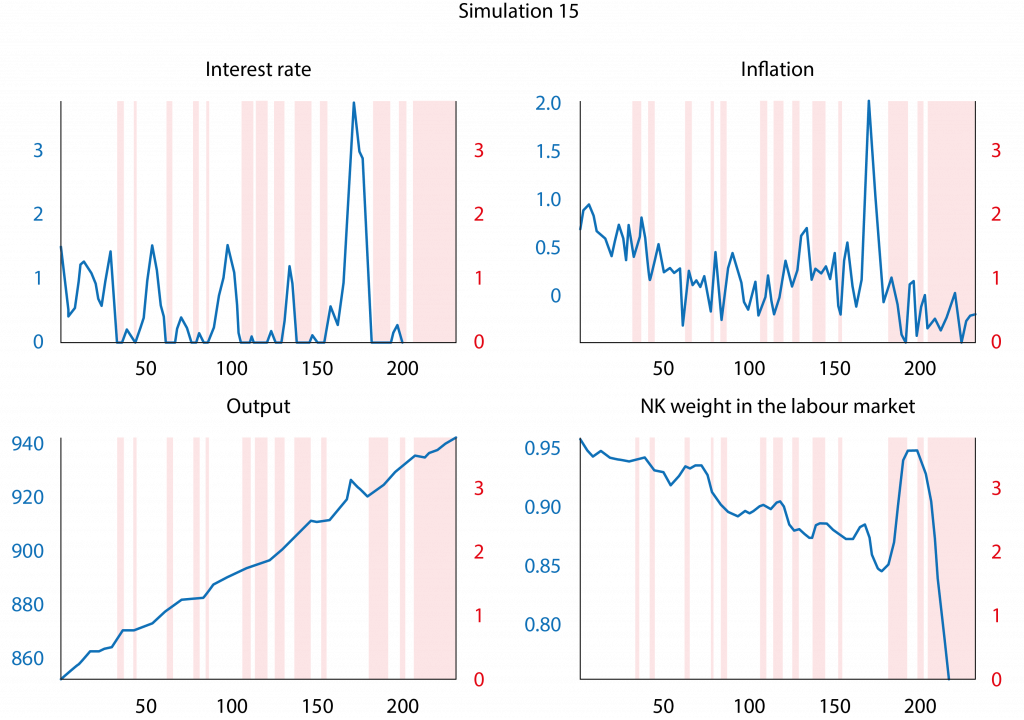

This process is illustrated in Figure 1, a simulation (no 15) of the model in which the ZLB is repeatedly hit (the shaded areas), with both inflation and interest rates gyrating sharply, and both output and the share of the relatively rigid-price sector (the NK weight) responding.

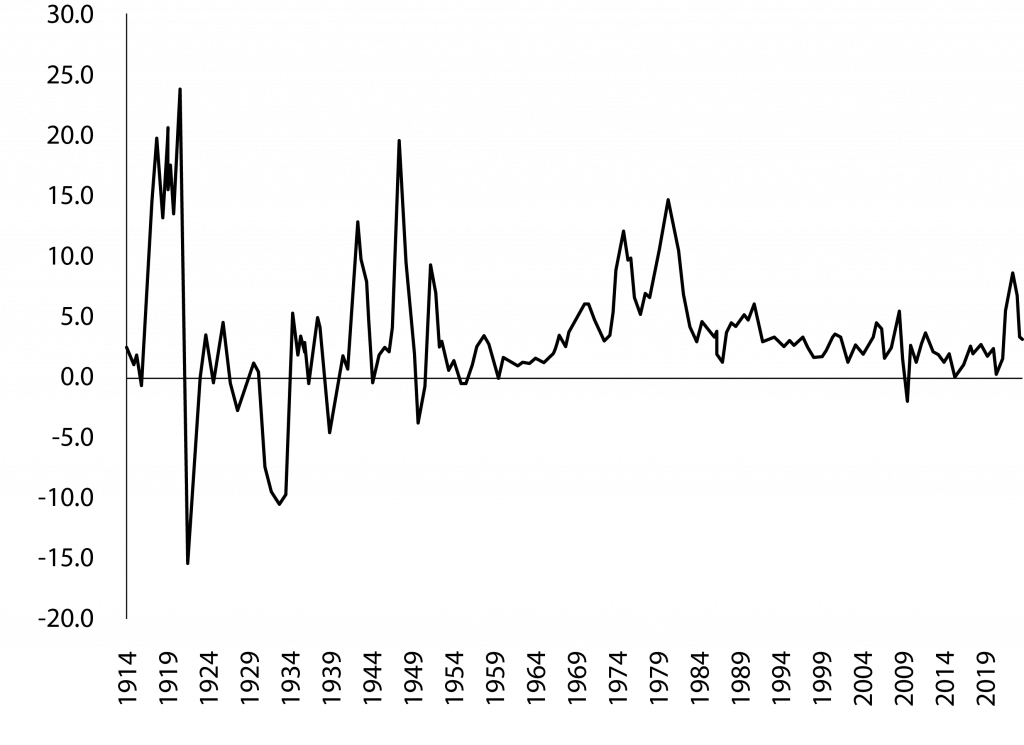

In this prediction of soaring inflation variance after the onset of the zero bound, this model has proved eerily correct – as the chart in Figure 2 of US inflation testifies. After going negative in 2010 and then settling at low rates initially in the 2010s, in 2023 inflation leapt upwards in a way reminiscent of the 1970s, in turn forcefully ending the ZLB with the sharp interest rate response currently playing out.

Figure 1. Bootstrap simulation (all shocks) of US model

Source: Le, Meenagh and Minford (2021).

Figure 2. US inflation for all urban consumers

Source: St Louis Fed

To cut into this inflation variance feedback loop, Le et al (2021) found that there were benefits from both new monetary rules and from stronger fiscal feedback rules. Specifically, they found that substituting a Price Level (or Nominal GDP – NOMGDPT) target for an inflation target in the interest-rate-setting rule could greatly increase stability – because a levels target requires much more persistent interest rate changes which are anticipated by agents, thus giving much more ‘forward guidance’.

They further found that fiscal policy has an important role to play in keeping the economy away from the ZLB; with a strongly stabilising fiscal policy that acts directly to prevent the ZLB occurring they found a big increase in both output and inflation stability.
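The contrast between an inflation target and a levels target can be made concrete with a stylised pair of interest rate rules. These are illustrative forms, not the rules estimated in Le et al (2021), and the notation is assumed here: $r_t$ is the policy rate, $\bar r$ its normal level, $\pi_t$ inflation with target $\pi^*$, $p_t$ the log price level with target path $p_t^*$, and $\phi_\pi$, $\phi_p$ positive response coefficients.

$$
\begin{aligned}
\text{inflation target:}\quad & r_t = \bar r + \phi_\pi\,(\pi_t - \pi^*) \\
\text{price level target:}\quad & r_t = \bar r + \phi_p\,(p_t - p_t^*), \qquad p_t^* = p_{t-1}^* + \pi^* \\
\text{where}\quad & p_t - p_t^* = (p_0 - p_0^*) + \sum_{s=1}^{t} (\pi_s - \pi^*)
\end{aligned}
$$

Under the first rule a one-off inflation overshoot drops out of the rule as soon as inflation returns to target. Under the second it remains in the level gap, and so in the interest rate, until it has been reversed; this is the persistence that agents can anticipate.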

4. Work on other economies

Work on the UK found that a similar model fitted UK data behaviour before and after the financial crisis, from 1986 to 2016 (Le et al 2023a). Like the US model, it implies that fiscal policy can contribute to stability by limiting zero bound episodes.

For the eurozone, in a model that divided the zone into two separate regions, North and South, Minford et al (2022) found that it matched eurozone data well over the first two decades of the euro’s existence; they modelled the zero bound indirectly by assuming the central bank rule targets the commercial credit rate with its repertoire of instruments, including QE. As in the other models just reviewed, fiscal policy can increase stability substantially.

Similar results are found for Japan (Le et al 2023b). Growth in Japan has been notoriously weak, even though monetary policy has been stimulative for several decades. Fiscal policy has been intermittently stimulative between contractionary episodes where consumption taxes were raised; the simulation results show that a fiscal rule consistently exerting countercyclical pressure would have stabilised output more around a rising trend.

5. Conclusions

In this review of the recent empirical evidence on macro modelling, we have found that DSGE models based on New Keynesian principles extended to allow for banking, the ZLB and varying price duration can account well for recent macro behaviour across a variety of economies, whether large and approximately closed like the US or small and open like the UK.

Related models can also account for macro behaviour in Japan and the eurozone. These models all find that a contribution from active fiscal policy increases macro stability and welfare, essentially by reducing the frequency of hitting the ZLB, and sharing the stabilisation role with monetary policy whose effectiveness under the ZLB is much reduced.

All this implies that fiscal rules must allow fiscal policy to operate, instead of preventing it as UK and EU rules currently do. These rules must be refocused on long-term solvency.

References

Alesina, A and Giavazzi, F (2013), editors, “Fiscal policy after the financial crisis”, University of Chicago Press, ix + 585 pp.

Blanchard, O, Romer, D, Spence, M, and Stiglitz, J (2012), editors, “In the wake of the crisis”, MIT Press, 239 pp.

Christiano, L, Eichenbaum, M, and Evans, C (2005) “Nominal Rigidities and the Dynamic Effects of a Shock to Monetary Policy”, Journal of Political Economy 113, 1-45.

Dixon, H, and Kara, E (2011) “Contract length heterogeneity and the persistence of monetary shocks in a dynamic generalized Taylor economy”, European Economic Review 55(2), 280-292.

Lane, P (2010) “Some Lessons for Fiscal Policy from the Financial Crisis”, IIIS Discussion Paper No. 334, IIIS, Trinity College Dublin and CEPR.

Le, VPM, Meenagh, D, Minford, P, and Wickens, M (2011) “How much nominal rigidity is there in the US economy – testing a New Keynesian model using indirect inference”, Journal of Economic Dynamics and Control 35(12), 2078-2104.

Le, VPM, Meenagh, D, Minford, P, Wickens, M, and Xu, Y (2016) “Testing Macro Models by Indirect Inference: A Survey for Users”, Open Economies Review 27(1), 1-38.

Le, VPM, Meenagh, D, and Minford, P (2021) “State-dependent pricing turns money into a two-edged sword: A new role for monetary policy”, Journal of International Money and Finance 119(C).

Le, VPM, Meenagh, D, Minford, P, and Wang, Z (2023a) “UK monetary and fiscal policy since the Great Recession – an evaluation”, Cardiff University Economics working paper, E2023/9, http://carbsecon.com/wp/E2023_9.pdf.

Le, VPM, Meenagh, D, and Minford, P (2023b) “Could an economy get stuck in a rational pessimism bubble? The case of Japan”, Cardiff Economics Working Paper, 2023/13, Cardiff University, Cardiff Business School, Economics Section.

Meenagh, D, Minford, P, Wickens, M, and Xu, Y (2019) “Testing DSGE Models by indirect inference: a survey of recent findings”, Open Economies Review 30(3), 593-620.

Meenagh, D, Minford, P, and Xu, Y (2023) “Indirect inference and small sample bias – some recent results”, Cardiff Economics Working Paper, 2023/15, Cardiff University, Cardiff Business School, Economics Section.

Minford, P, Ou, Z, Wickens, M, and Zhu, Z (2022) “The eurozone: What is to be done to maintain macro and financial stability?”, Journal of Financial Stability 63(C).

Smets, F, and Wouters, R (2007) “Shocks and Frictions in US Business Cycles: A Bayesian DSGE Approach”, American Economic Review 97, 586-606.

Spilimbergo, A, Symansky, S, Blanchard, O, and Cottarelli, C (2008) “Fiscal Policy for the Crisis”, a note prepared by the Fiscal Affairs and Research Departments, IMF, December 29, 2008.