The impact of AI on the nature and quality of jobs

Laura Nurski is a Research Fellow and Mia Hoffmann a Research Analyst at Bruegel.

Summary

Artificial intelligence (AI), like any workplace technology, changes the division of labour in an organisation and the resulting design of jobs. When used as an automation technology, AI changes the bundle of tasks that make up an occupation. In this case, implications for job quality depend on the (re)composition of those tasks. When AI automates management tasks, known as algorithmic management, the consequences extend into workers’ control over their work, with impacts on their autonomy, skill use and workload.

We identify four use cases of algorithmic management that impact the design and quality of jobs: algorithmic work-method instructions; algorithmic scheduling of shifts and tasks; algorithmic surveillance, evaluation and discipline; and algorithmic coordination across tasks.

Reviewing the existing empirical evidence on automation and algorithmic management shows significant impact on job quality across a wide range of jobs and employment settings. While each AI use case has its own particular effects on job demands and resources, the effects tend to be more negative for the more prescriptive (as opposed to supportive) use cases. These changes in job design demonstrably affect the social and physical environment of work and put pressure on contractual employment conditions as well.

As technology development is a product of power in organisations, it replicates existing power dynamics in society. Consequently, disadvantaged groups suffer more of the negative consequences of AI, risking further job-quality polarisation across socioeconomic groups.

Meaningful worker participation in the adoption of workplace AI is critical to mitigate the potentially negative effects of AI adoption on workers, and can help achieve fair and transparent AI systems with human oversight. Policymakers should strengthen the role of social partners in the adoption of AI technology to protect workers’ bargaining power.

1 Some definitions: what is AI and what is job quality?

What is artificial intelligence?

The European Commission’s High-Level Independent Expert Group on AI (AI HLEG, 2019) defined1 artificial intelligence as “software (and possibly also hardware) systems, designed by humans that, given a complex goal, act in the physical or digital dimension by perceiving their environment through data acquisition, interpreting the collected structured or unstructured data, reasoning on the knowledge, or processing the information derived from this data and deciding the best action(s) to take to achieve the given goal.”

Figure 1. Definition of AI and smart technologies

Source: Bruegel based on AI HLEG (2019).

The same smart product can have different effects on workers, depending on its application in the organisation. Head-worn devices, for example, can be used for different applications (giving instructions, visualising information, providing remote access or support) that affect different elements of job quality (autonomy and skill discretion, social support, task complexity, physical and cognitive workload) (Bal et al 2021).

The specific work setting and organisational context play key roles in determining the effects on jobs. If the head-worn technology supports the worker by providing information and supporting decentralised decision-making, it increases worker autonomy and skill discretion.

However, if the real-time connection is used to monitor the worker, prescribe work tasks and take over decision-making, then the worker loses autonomy. It is therefore necessary to further detail the specific use case or organisational application of the AI system, which we do in section 3.

First, it is helpful to understand what job quality is and how it is shaped in organisations.

What is job quality?

A good-quality job (Nurski and Hoffmann 2022) is one that entails:

-Meeting people’s material, physical, emotional and cognitive needs from work through: job content that is balanced in the demands it places on workers and the resources it offers them to cope with those demands, in its physical, emotional and cognitive aspects; supportive and constructive social relationships with managers and co-workers; fair contractual employment conditions in terms of minimum wages, working time and job security; safe and healthy physical working conditions.

-Contributing to positive worker wellbeing: subjectively, in terms of engagement, commitment and meaningfulness; and objectively, in terms of material welfare and physical and mental health.

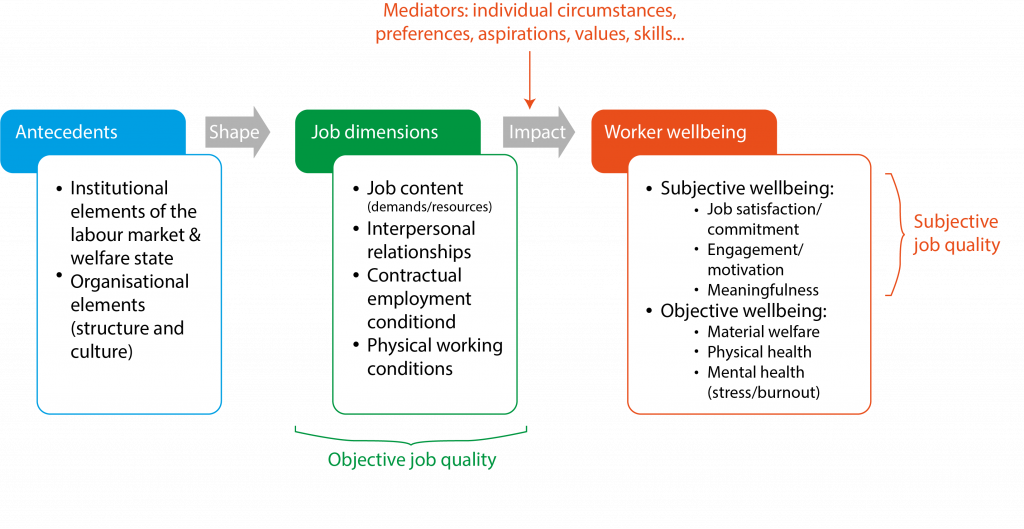

Figure 2. Integrative definition of job quality

Source: Nurski and Hoffmann (2022).

The analysis of job quality must separate three conceptual levels: antecedents measured at a level higher than the job (ie. the firm, labour market or welfare state), job dimensions measured at the level of the job, and worker wellbeing measured at the level of the individual holding the job (see Figure 2).

Out of the four job dimensions, job content is of particular importance, as it is the main predictor of worker outcomes in terms of behaviour, attitude and wellbeing (Humphrey et al 2007). Common indicators of job demands include work intensity and emotional load, while job resources include autonomy, social support and feedback.

2 Organisational antecedents to job quality

While institutional elements of the labour market (including working time regulation and minimum wages) can put boundaries on some dimensions of job quality, most of its elements are shaped inside the organisation.

Job content originates from the division of labour in the organisation

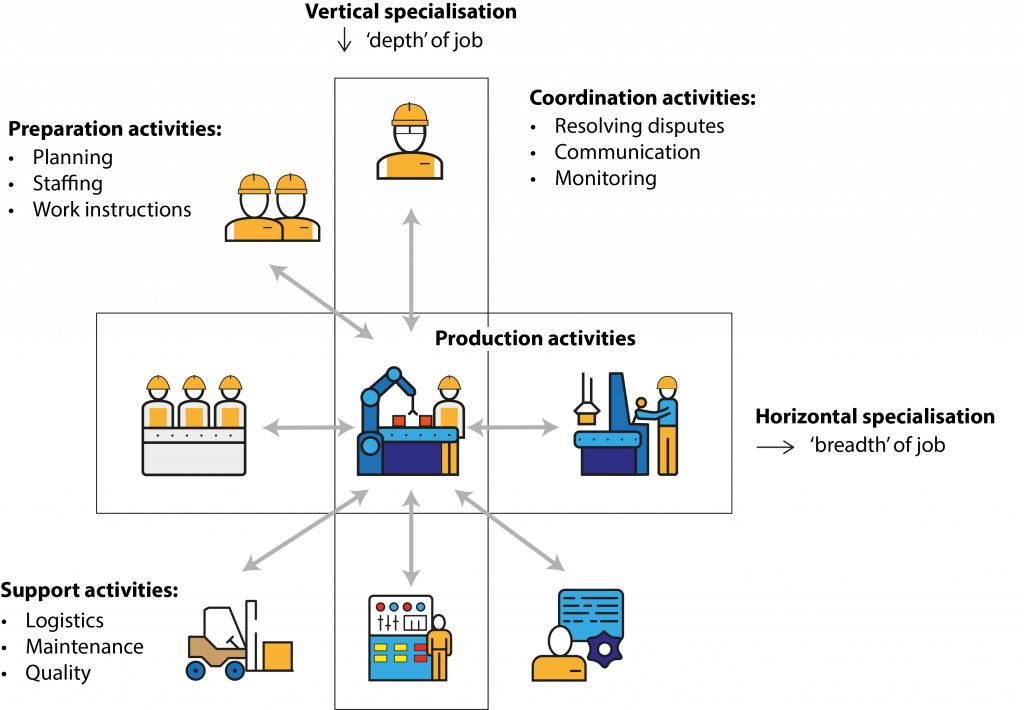

The set of tasks and decisions that make up the content of a job is the result of a division of labour within an organisation. Organisations consist of both production and governance activities (Williamson, 1981).

Production activities contribute directly to transforming an input to an output, while governance includes preparation, coordination and support activities (Delarue 2009). The division of labour across these production and governance activities results in an organisational structure made up of a horizontal production structure and a vertical governance structure (de Sitter 1998).

The amount of horizontal specialisation in production activities determines the ‘breadth’ of a job: if a worker only executes one highly specialised task repeatedly, she has a narrow job, while if she executes a variety of production tasks, she has a wide job.

The ‘depth’ of a job, on the other hand, is determined by the amount of vertical specialisation in terms of the control over the work. If a worker controls aspects of her work such as quality control, planning, maintenance or method specification, then she has a deep job, while if she only executes her tasks without having any control over the how, when and why, then she has a shallow job (Mintzberg, 1979, chapter 4).

Figure 3. Job content originates from the division of labour in the organisation

Source: Bruegel based on Mintzberg (1979) and Delarue (2009).

The link between job depth and breadth on the one hand and job quality on the other is established in the job demands-control model (Karasek 1979), extended in the job demands-resources model (Demerouti et al 2001), detailed in Ramioul and Van Hootegem (2015).

Too-narrow job design (ie. too much horizontal fragmentation) might increase job demands through highly repetitive, short-cyclical labour. Too-broad job design and too much task variation on the other hand might cause overload, conflicting demands and role ambiguity.

Similarly, too-shallow job design (ie. too much vertical fragmentation) means that workers lack the decision latitude to resolve issues and disruptions. Too-deep job design however might put an undue burden of excessive decision-making on workers (Kubicek et al 2017).

Good quality job design thus provides a manageable balance between job demands on workers and the job resources provided. While too-wide or deep job design might cause stress and overload for the worker (especially when she has more demands than resources), too-narrow or shallow job design is likely to lead to underutilisation and low meaningfulness of work.

Job content determines the other dimensions of job quality

Once job content is designed through vertical and horizontal specialisation, it further determines each of the other job dimensions. Indeed, while the four job quality dimensions listed in Figure 2 are conceptually different and independent of each other, empirically they tend to move together.

Latent class analysis on a survey of 26,000 European workers shows that clusters of jobs can be found that have similar characteristics along these dimensions (Eurofound 2017). Not surprisingly, these clusters can be identified along the lines of occupations and sectors, and job-quality differences can therefore mostly be explained by occupation and sector (Eurofound 2021).

The link between job content and contractual conditions is straightforward and often formalised by linking job titles and job descriptions with salary structures and pay grades. In the economic literature, it is also generally accepted that the nature of tasks within a job directly determines how much a job will be rewarded.

More complex tasks that require more advanced or a greater variety of skills receive higher wages (Autor and Handel 2013). There is also a direct link to working-time quality, as tasks in a continuous process (either production or services) will require at least some people to work in shifts or weekends.

Policymakers and employers need to look beyond working conditions into job design (job demands and resources) to understand the full impact of AI on job quality

Similarly, job content determines directly the physical environment of a worker and any exposure to hazardous ambient conditions or safety risks. When a worker performs cognitive tasks, her physical environment is likely an office with a desk and a computer and health risks are mostly ergonomic.

When a worker performs manual production tasks, her environment is likely a factory or resource extraction facility, and physical health and safety risks are most prominent. When a worker does in-person care or service work, her environment likely consists of a school, shop or care institution, and health and safety risks mostly stem from interpersonal interactions such as workplace violence.

Finally, perhaps less obvious at first sight, job design determines the social environment of the workplace. By allocating tasks and decisions to specific jobs, interdependencies between tasks held by different people require coordination between those people.

When this coordination is handled badly, misunderstanding and frustration are created between people, both horizontally (between colleagues) and vertically (between management and workers), potentially undermining constructive professional relationships and emotional support.

On the other hand, well-managed interdependencies between colleagues and optimally designed teams offer the opportunity for the development of a positive social environment.

3 Typology of AI use cases in the functions of the organisation

Adoption of AI in the workplace changes the abovementioned division of labour in the production and governance process – and therefore the resulting job design.

Applying AI in the production process is called ‘automation’ and is defined as “the development and adoption of new technologies that enable capital to be substituted for labor in a range of tasks” (Acemoğlu and Restrepo 2019, p.3).

The application of AI in the governance process is known as ‘algorithmic management’ (AM) and is defined as “software algorithms that assume managerial functions” (Lee et al 2015, p.1603). To narrow down the different functions within AM, we refer to the management literature to retrieve generally accepted ‘functions of management’2.

We integrate the definitions of Cole and Kelly (2011)3 and Martela (2019)4 by defining the functions of management as:

(1) Goal specification: specifying the vision or objectives of the organisation;

(2) Task specification: specifying the organisation of work necessary to achieve the objectives, including

a. task division: how the whole process is divided into individual tasks;

b. task allocation: how individual tasks are combined and allocated to roles;

c. task coordination: how tasks are coordinated across roles;

(3) Planning specification: specifying the order and timings of tasks, ensuring all the material and human resources are available in the right time and place;

(4) Incentivising behaviour: ensuring that everyone behaves in a way that adheres to the specifications above (in both a controlling and a motivating sense);

(5) Staffing: filling all the roles with people and ensuring that people have the right skills for these roles.

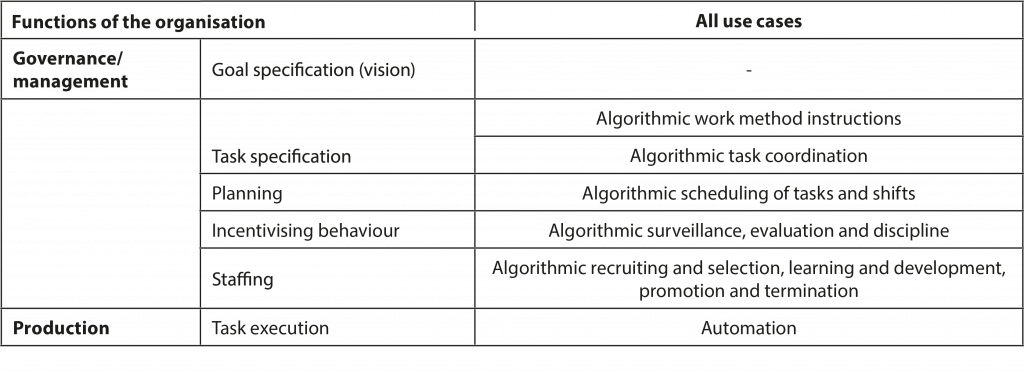

In theory, AI could be used for each of these five management functions. While we didn’t come across any applications specifying the goals or vision of the organisation, we did find many applications in the remaining four management functions, as well as in task execution.

Table 1: Functions of management and AI use cases

Source: Bruegel based on Cole and Kelly (2011), Martela (2019) and Puranam (2018).

Focussing on the organisational function of AI and not on the specific technological product (like a head-worn device) or a specific employment setting (like platform work) brings two benefits.

First, as Bal et al (2021) showed in their review of head-worn devices, the impact of technology varies with its specific application. By skipping the product level, we immediately investigate the level of the organisational function which directly influences job design.

Second, by rising above the specific employment setting, we can say something general about AM both in traditional jobs and in new employment settings. Comparing our typology of AM to others in the literature, we especially want to highlight two:

-Wood (2021) used the classification of Kellogg et al (2020), based on Edwards’ (1979) foundational typology of control mechanisms in organisations: (1) algorithmic direction (what needs to be done, in what order and time period, and with different degrees of accuracy); (2) algorithmic evaluation (the review of workers’ activities to correct mistakes, assess performance, and identify those who are not performing adequately); and (3) algorithmic discipline (the punishment and reward of workers in order to elicit cooperation and enforce compliance). Our approach – from a job-design perspective – separates directions in work method from directions in the timing of work, since method autonomy and scheduling autonomy have different effects on workers’ wellbeing, motivation and stress levels (Breaugh, 1985; De Spiegelaere et al 2016). On the other hand, we combine evaluation and discipline in one category as they are both meant to incentivise behaviour and ensure adherence to the task and planning specification. We therefore consider them the same concept on a scale from soft incentive to hard discipline.

-Parent-Rocheleau and Parker (2021) also developed an AM typology from a job-design perspective, which includes: monitoring, goal setting, performance management, scheduling, compensation and job termination. However, their six defined functions have several overlaps. For example, their definition of monitoring refers to “collecting, storing, or analysing and reporting the actions or performance of individuals or groups”, but they further explain that this data can be used to set goals, assign tasks, set performance targets and evaluate them. We therefore do not include monitoring as a separate function but include it in surveillance of effort and evaluation of performance. Moreover, their definition of management functions is based on current technical capabilities of algorithms, which is likely to be quickly outdated at the current pace of innovation. In contrast, long-standing theories on the functions of management still apply today, despite considerable changes over time in the nature of work.

In our typology, the first three management functions in Table 1 (goal, task and planning specification) can be thought of as only impacting the design of the job, without considering the selection of the person in the job. They determine directly the amount of job demands and resources or control people have over their job, especially control over the work methods, work schedules and work objectives.

Breaugh (1985) identified exactly these three facets of autonomy as distinct concepts5 and they can also be recognised in the European Working Conditions Survey questions6. Finally, empirical research confirms that these different facets of autonomy have different effects on workers (De Spiegelaere et al 2016).

The final two management functions in Table 1 consider the alignment of a person with the job. The fourth (incentivising behaviour) can amplify the effects of the first three by squeezing out any room for manoeuvre that might have been left in the original job design.

The fifth (staffing) matters from an inclusion perspective as it determines who gets put in which job. Given that discrimination in staffing functions (recruitment and selection, learning and development, promotion and termination) has been extensively documented elsewhere7, we do not include this use case in our review.

In practice, management algorithms often serve several of these managerial functions at once. The most obvious example is that all management algorithms also automate away tasks of human managers, such as scheduling or supervision.

But more nuanced overlaps exist as well, such as task-assignment algorithms that simultaneously keep track of the speed of execution in order to allocate the following task in a timely manner (Reyes 2018).

4 Five AI use cases in automation and algorithmic management

For each of the AI use cases, we gathered current scientific evidence on mechanisms of impact on various aspects of job content and the implications for social, physical and contractual working conditions.

We used the job demands-resources model (Demerouti et al 2001) to assess how AI impacts job content, including work intensity (workload, pace, interdependence), emotional demands, autonomy, skills use, task variety, identity and significance.

Papers in the review are placed at the intersection of computer science and psychology, sociology and management science. They include summary papers and reports, scientific literature reviews, micro-level empirical research using panel data, qualitative case studies of organisations or workers exposed to automation or governance AI, and books and news articles.

We identified the specific organisational function of the algorithmic system in each research setting. Most case studies investigate entire algorithmic systems that often exhibit multiple features belonging to different managerial functions, which we separated and analysed individually.

Automation

Automation has been the primary purpose of technology adoption in the past. Today, AI and other smart technologies can perform a wider range of tasks than previous automation technology, including not only routine and repetitive tasks but also non-routine cognitive and analytical tasks.

AI increasingly enables workers and robots to collaborate at closer physical proximity by reducing safety risks associated with close human-machine interaction (Gualtieri et al 2021; Cohen et al 2022).

Since currently hardly any jobs can be fully automated, most workers will experience a reallocation and rebundling of the tasks that together form their occupations. Implications for job quality depend on the (re)composition of those tasks.

If technology takes over simple, tedious or repetitive tasks, workers can spend more time on complex assignments that require human-specific knowledge, including judgment, creativity and interpersonal skills.

Complex and challenging work implies a better use of skills and is associated with higher job satisfaction. Yet, permanently high cognitive demands raise work-related stress, since mental relief from handling ‘simple’ tasks disappears (Yamamoto 2019).

The automation of analytical or operational tasks may induce a shift from active work to passive monitoring jobs which are associated with mental exhaustion (Parker and Grote 2020). This exhaustion results from the need to pay close attention to processes requiring little to no intervention, while engaging tasks have been taken away.

Negative consequences for operational skills and work performance are well-documented, for example among aircraft pilots and in relation to autonomous vehicles (Haslbeck and Hoermann 2016; Stanton 2019).

Former operators who become supervisors of machines or algorithms gradually lose their skills and operational understanding. As a result, their ability to detect errors or perform tasks in case of system failures degrades, undermining their task control abilities and cultivating technological dependence (Parker and Grote 2020).

Collaborating with automation technology, as opposed to supervising it, has different implications for job quality. Robotisation in general is associated with work intensification as workers adapt to the machines’ work pace and volume, and tasks become more interdependent (Antón Pérez et al 2021). Work may become more repetitive and narrower in scope, reducing task discretion and autonomy (Findlay et al 2017).

Moreover, the integration of automation technology in workflows promotes task fragmentation, which reduces task significance by separating workers’ tasks from larger organisational outcomes and goals (Evans and Kitchin 2018).

At the same time the effects of human-robot collaboration on mental stress are not straightforward: some studies find that collaborating with robots is stressful for workers (Arai et al 2010), while others find no such effect (Berx et al 2021).

Automation-induced changes in job content often imply changes in the physical working environment. When technology is used to automate dirty, dangerous and strenuous tasks it may alleviate occupational health and safety risks (physical strain, musculoskeletal disorders, accidents) (Gutelius and Theodore 2019).

Meanwhile, if AI integration leads to more desk and computer work, it may also exacerbate health risks associated with sedentary behaviour (Parker and Grote 2020; PAI 2020)8. Moreover, the addition of autonomous machines to workplaces may pose new dangers to worker safety, from collision risks to malfunctions due to sensor degradation or data input problems (Moore, 2019).

The effects of task reallocations can also spill over to the social work environment. Automation of some tasks may allow more collaboration with colleagues or closer interactions with supervisors, or workers may spend more time on client-facing activities (Grennan and Michaely 2020).

At the same time, working in a highly automated environment as opposed to working with humans can lead to greater workplace isolation (Findlay et al 2017).

On contractual implications, the continued automatability of tasks increasingly enables firms to replace (expensive) labour with (cheaper) capital inputs. In the short term, AI-powered automation likely reduces labour demand for certain tasks and thereby occupations, driving down wages (Acemoglu and Restrepo 2020).

This effect is more pronounced in low-skill jobs, characterised by repetitive tasks, or where skill content declines due to automation, as labour input becomes more replaceable (Graetz and Michaels 2018). For workers exposed to automation, this implies lower job security and deteriorating career prospects, adversely affecting mental health (Patel et al 2018; Abeliansky and Beulmann 2021; Schwabe and Castellacci 2020).

At the same time, automation may stimulate labour productivity growth and raise real wages through lower output prices (Graetz and Michaels 2018).

Grennan and Michaely (2020) showed that similar patterns also apply to automation of knowledge work among financial analysts. Increased exposure to automation from AI-powered prediction analytics leads to worker displacement, task reallocation towards more creative and social tasks, and improved quality of work (lower bias), but lower remuneration.

Algorithmic scheduling of shifts and tasks

Algorithmic planning automates the process of structuring work through time. Two forms of technology-enabled planning can be distinguished: (1) algorithmic shift scheduling, meaning the assignment of work shifts based on legal boundaries, staff availability and predicted labour demand; and (2) algorithmic task scheduling, meaning setting the order of tasks and the pace of execution, and updating sequences in response to changing conditions.

Algorithmic support is useful in complex and heavily regulated scheduling environments such as healthcare (Uhde et al 2020). Elsewhere, algorithmic scheduling is growing increasingly popular for its cost-saving properties (Mateescu and Nguyen 2019b), especially in hospitality and retail (Williams et al 2018).

Using historical data on weather, foot traffic or promotions, AI systems can forecast labour needs with growing accuracy to prevent overstaffing during periods of low demand. This implies that there are just enough staff present to get the work done at any given time. As a result, work intensity during shifts rises, since they do not include periods of low activity (Guendelsberger 2019).

The fact that prediction accuracy increases closer to the date in question incentivises short-notice schedules. In one case study of a food distribution centre, workers received a text message in the morning that either confirmed or cancelled their shift for the same day, based on their previous shift’s performance9 (Gent, 2018).

Furthermore, real-time monitoring of conditions allows incremental scheduling adjustments in response to sudden changes, relying on workers assigned to on-call shifts.

Consequently, algorithmically generated schedules tend to be unpredictable, often published only a few days in advance; inconsistent, varying considerably from week to week; and inadequate, assigning fewer hours than preferred, leading to high rates of underemployment (Williams et al 2018).

This leaves workers with considerable working time and income insecurity. It also places a lot of strain on the organisation of non-work life, particularly for people with caregiving responsibilities (Golden 2015).

The consequences for non-work life are far-reaching: Harknett et al (2019) detailed the adverse health effects of unpredictable schedules, including sleep deprivation and psychological distress, and how exposure to unstable schedules affects workers’ young children, who suffer from heightened anxiety and behavioural problems.

These effects are often aggravated by poor system design choices, such as not allowing for autonomous revisions or shift switching among colleagues, reducing worker control (Parent-Rocheleau and Parker 2021) and harming team morale (Uhde et al 2020).

Crucially, in retail stores, stable schedules have been shown to ultimately benefit productivity and sales by raising staff retention rates (Williams et al 2018).

Algorithmic scheduling is not limited to offline work. On food delivery platforms such as Deliveroo and Foodora, the number of shifts is algorithmically determined one week in advance, based on forecast demand for various geographic zones and timeslots (Ivanova et al 2018).

Riders’ access to these shifts, however, depends on performance-based categorisation10: the top third of workers get to choose first, while the bottom third must take the remaining shifts. This significantly reduces the working-time flexibility typically associated with, and heavily advertised in, platform work.

It also causes underemployment and income insecurity, as some workers are unable to pick up as many shifts as they want. Since schedules are based on expected demand, fluctuations can lead to acute workload pressure and create safety risks if workers are nudged to come online in particularly adverse conditions (Gregory 2021; Parent-Rocheleau and Parker 2021).
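The tiered access described above can be illustrated with a minimal sketch. It is not the platforms' actual algorithm: the `Rider` fields, the performance score and the rule that riders claim shifts strictly in score order within each tier are all simplifying assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class Rider:
    name: str
    score: float        # hypothetical performance score, higher is better
    wanted_shifts: int  # shifts the rider would like to work this week


def allocate_shifts(riders: list[Rider], available_shifts: int) -> dict[str, int]:
    """Let riders claim shifts tier by tier, best-scoring tier first."""
    ranked = sorted(riders, key=lambda r: r.score, reverse=True)
    # Ceiling division gives three roughly equal tiers; max() guards an empty list.
    tier_size = max(1, -(-len(ranked) // 3))
    allocation: dict[str, int] = {}
    remaining = available_shifts
    for start in range(0, len(ranked), tier_size):
        for rider in ranked[start:start + tier_size]:
            # A rider gets what they want only if enough shifts are left.
            granted = min(rider.wanted_shifts, remaining)
            allocation[rider.name] = granted
            remaining -= granted
    return allocation


# Hypothetical example: six riders each want five shifts, but only 18 exist.
riders = [
    Rider("A", 0.9, 5), Rider("B", 0.8, 5), Rider("C", 0.5, 5),
    Rider("D", 0.4, 5), Rider("E", 0.2, 5), Rider("F", 0.1, 5),
]
allocation = allocate_shifts(riders, available_shifts=18)
```

In this made-up example the top two tiers absorb all 18 shifts and the bottom tier receives none, which is the underemployment mechanism the text describes.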

Even ride-hailing platforms which don’t operate under a formal scheduling system feature soft controls aimed at influencing drivers’ working time and thus undermining their autonomy (Lee et al 2015).

Uber drivers, for example, receive messages that nudge them to log on, or stay logged on, at times when the algorithm predicts high demand, which leads workers to be on-call without any guarantees they will receive ride requests (Rosenblat and Stark 2016).

Beyond shift planning, algorithmic scheduling of tasks within shifts aims to optimise workflows, reduce disruption and increase efficiency. In manufacturing or logistics, for example, optimised timing of order releases or sequencing of production orders can alleviate pressure in high-intensity work environments (Briône 2017; Gutelius and Theodore 2019).

However, ordering tasks algorithmically also reduces workers’ autonomy to organise their work in a way they see fit (Briône 2017), and instantaneous task assignments may accelerate the pace of work (Gutelius and Theodore 2019).

Reyes (2018) described the implications of a room-assignment algorithm for housekeepers in a large hotel. The system prioritised room turnover and assigned rooms to housekeepers in real-time once guests checked out.

For housekeepers, who previously were assigned individual floors to clean during the day, the inability to self-determine the sequence of rooms led to an increase in workload and time pressure, as the algorithm sent them across different floors and sections of the hotel, not accounting for their heavy equipment.
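A rough sketch can make this mechanism concrete. It compares the number of floor changes when rooms are assigned in check-out order with the number when the same rooms are cleaned floor by floor. The room numbers, the convention that the hundreds digit encodes the floor, and the use of floor changes as a proxy for walking effort are assumptions for illustration only and are not taken from Reyes (2018).

```python
def floor_changes(room_sequence: list[int]) -> int:
    """Count how often consecutive rooms lie on different floors.

    Assumes the floor is the room number divided by 100 (room 304 is on floor 3).
    """
    floors = [room // 100 for room in room_sequence]
    return sum(1 for prev, nxt in zip(floors, floors[1:]) if prev != nxt)


# Hypothetical order in which guests check out during one shift.
checkout_order = [304, 112, 310, 205, 118, 207]

# The same rooms cleaned one floor at a time, as a stand-in for floor-based work.
floor_based_order = sorted(checkout_order)

realtime_changes = floor_changes(checkout_order)
floor_based_changes = floor_changes(floor_based_order)
```

With these invented numbers, real-time assignment requires five floor changes against two for the floor-based order, each of which means moving heavy equipment between floors.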

Algorithmic work method instructions

Work method instructions refer to the algorithmic provision of information to workers on how to execute their work, often in real-time and personalised to the workers’ current activity. The purpose is typically to raise the quality of output, by reducing the probability of human error or by lowering the skills required to perform a certain task.

The practice ranges from enabling access to contextually relevant information to giving instant feedback to delivering live instructions about methods.

Enabling access to relevant information increases autonomy, as it builds capacity for decentralised decision-making at a local level. In contrast to communication technology, which tends to promote reliance on others for decisions, information technology empowers agents to handle tasks more autonomously (Bloom et al 2014).

Case studies support this finding, provided that the information is relevant, and workers can decide freely whether or not to use it (Bal et al 2021). A study on patient monitoring among anaesthetists found that providing doctors with smart glasses that superimpose patients’ vital signs onto their field of vision reduced the need to multitask (monitoring multiple sources of information), reducing mental strain (Drake-Brockman et al 2016).

In fact, participants found the device so useful that when asked how to improve it, the most frequent answer was for more information to be displayed. Access to contextual information can also improve workers’ safety: AI-enhanced personal protective equipment can monitor and evaluate environmental conditions including temperature, oxygen levels or toxic fumes, thus helping workers, like firefighters, navigate high-risk work environments (Thierbach 2020).

Conversely, the absence of information can reduce worker control. Ride-hailing apps, for example, alert drivers to areas with higher prices in order to encourage them to move to those areas and satisfy excess demand.

Drivers are free to follow or ignore this information, giving them autonomy over whether or not to exploit fare-price variations (Lee et al 2015). At the same time, when offered a ride request, drivers do not receive crucial information about the destination or the fare before having to decide, which they must do within 15 seconds (Lee et al 2015; Rosenblat and Stark 2016).

The same holds for food-delivery workers at Deliveroo and Foodora, who do not know the address of the customer when they accept restaurant pick-ups (Ivanova et al 2018). Workers’ capacity to determine which rides are worthwhile is limited by this design choice.

AI-supported personalised, real-time feedback can support on-the-job learning (Parker and Grote 2020) and clarify role expectations (Parent-Rocheleau and Parker 2021). The installation of an intelligent transportation system in London buses gave drivers real-time feedback on their driving behaviour (speed, braking, etc.).

While this algorithmic monitoring and evaluation did reduce their work method discretion, workers also described the feedback as helpful to inform better driving practices in adverse conditions, and used the information to learn and improve safe driving behaviour (Pritchard et al 2015).

Real-time, personalised feedback can also benefit workers’ health. Wearables equipped with smart sensors can monitor body movements like twisting or bending, identify unsafe movements and detect hazardous kinetic patterns (Nath et al 2017; Valero et al 2016).

Workers are notified about the occurrence of dangerous movements either via instant alerts, such as vibrations or beeping, or through summary statistics at the end of the workday, which can help to identify and prevent potentially risky habits (Valero et al 2016).

Experts warn, however, that the permanent monitoring of movement could also be used to track work efforts and breaks, contributing to a permanent state of surveillance that undermines workers’ autonomy and raises stress levels (Gutelius and Theodore 2019).

Crucially, the distinction between learning-supportive algorithmic feedback and prescriptive automated work instructions can be fuzzy. Voice recognition systems at call centres monitor customers’ and agents’ conversations for emotional cues and provide feedback on the appropriateness of operators’ responses (see eg. Hernandez and Strong 2018; De la Garza 2019).

Feedback comes in the shape of instructions: to talk more slowly, display higher alertness or say something empathetic, to improve the customer’s experience. This not only reduces worker discretion over how to respond to customers, it also removes the need for emotional and interpersonal skills to judge a customer’s mood and choose how to react to it (Parent-Rocheleau and Parker 2021).

Work-method instructions also come in more explicit and prescriptive forms, typically transmitted through wearable or handheld devices. In order-picking, voice- or vision-directed applications provide step-by-step instructions to workers, navigating them through the warehouse with the goal of increasing efficiency by reducing the time spent on low-value activities like walking (Gutelius and Theodore 2019).

The practice of ‘chaotic storage’, meaning the storage of inventory without an apparent system enabled by the digital recording of items’ location, raises worker dependency on the devices’ instructions to find and collect items, and prevents the acquisition of organisational knowledge (Delfanti 2021).

The main consequence is work intensification, as continuous instructions and instant initiation of the next task accelerate the work speed and ensure workers’ attention is permanently focused on the task at hand (Gutelius and Theodore 2019).

Integrating AI-driven instructions into manufacturing workflows induces task standardisation and accommodates lot-size manufacturing (Moore, 2019; Parker and Grote 2020). Smart glasses or other devices are used to carry out on-the-spot production tasks, especially in the case of smaller orders of customised products. The glasses provide on-the-spot instructions, guiding the user in executing a task that is only done once to produce a specific order.

While this shortens learning curves and improves learnability in complex work environments, it can also lead to skill devaluation and obsolescence (Bal et al 2021). Workers need fewer pre-existing skills to perform the job and do not acquire new, long-term skills on the job, as they are always told exactly what to do and when to do it without necessarily knowing why.

The social and contractual implications are similar across all types of work-method direction. First, they reduce social interactions at work (Gutelius and Theodore 2019; Bal et al 2021). As each and every step needed to perform a task is readily displayed, the need to collaborate to solve problems disappears.

And since workers’ attention is fixed on the virtual device, other communication between colleagues decreases, too (Moore 2019). Second, lower job complexity alleviates entry barriers for less-skilled workers, but increases worker replaceability.

This enables growing reliance on labour brokers and temp workers, thereby harming job security and career prospects, and placing downward pressure on wages (Gutelius and Theodore 2019).

It is therefore critical to distinguish between technologies that augment workers’ decision-making capacity and support learning, and those that replace human decision-making at work.

Algorithmic surveillance, evaluation and discipline

Surveillance has always been an important means of control in the workplace. Digital technologies including CCTV and e-mail have made surveillance of the workspace more ubiquitous, but have still confined it to surveillance-by-observation (Edwards et al 2018).

Cheap monitoring tools combined with data availability and computing power have given rise to surveillance-by-data collection (Mateescu and Nguyen 2019a). Sensors, GPS beacons and other smart devices can continuously extract granular, previously unmeasurable, information about workers, and feed into algorithmic or human workforce management decisions (Edwards et al 2018; Mateescu and Nguyen 2019a).

The motivations of organisations in adopting these types of monitoring practices are multifaceted and range from “protecting assets and trade secrets, managing risk, controlling costs, enforcing protocols, increasing worker efficiency, or guarding against legal liability” (Mateescu and Nguyen 2019a, p.4).

In itself, ubiquitous monitoring lowers job quality, as feelings of constant surveillance can cause stress, raise questions of data privacy, undermine workers’ trust in the organisation (Moore and Akhtar 2016) and make them feel powerless and disconnected (Parker and Grote 2020).

Continuous monitoring places excessive pressure on workers to follow protocols exactly, curbing work-method discretion. UPS drivers reported how delivery vehicles equipped with a myriad of sensors now track every second of their day, including when they turn the key in the ignition, fasten their seatbelt or open the door.

As a result, workers are held accountable for minor deviations from a protocol designed to optimise efficiency and safety, such as turning on the car before fastening the seatbelt (which is considered a waste of fuel) (Bruder 2015).

Minute-by-minute records of work activities can also be used to redefine paid worktime by enabling employers to exclude time in which workers don’t actively engage in (measurable) task execution (Mateescu and Nguyen, 2019a). This has consequences for workers’ income security and incentivises overwork.

In the UK home-care sector, Moore and Hayes (2018) found that the use of a time-tracking system, which defined work hours only as contact time, led to a decrease of paid worktime, an increase in hours worked and less autonomy for care workers, as time restrictions prevented independent judgement over how much care a patient needed.

This practice is also well-documented in the warehousing industry, where handheld devices meant for scanning products and workstations (so-called ‘guns’) are used to keep track of work productivity and “time off task” (TOT) (Gurley 2022).

Workers’ TOT begins as soon as the last item is scanned and ends only once the next item is registered. Since even small increments of ‘idle’ time must be justified, workers feel unable to take full breaks because walking out of the vast warehouse takes too much TOT (Burin 2019).
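The TOT rule described above can be sketched in a few lines of code. This is a minimal illustration only, not any employer's actual system: the function names, the example timestamps and the optional `grace` allowance are hypothetical. It simply treats every gap between two consecutive scans as time off task, as the rule in the text implies.

```python
from datetime import datetime, timedelta


def time_off_task_gaps(scan_times, grace=timedelta(0)):
    """Return (start, end, gap) for each gap between consecutive scans.

    Following the rule in the text, a gap starts when one item is scanned
    and ends when the next one is registered. `grace` is a hypothetical
    allowance: gaps no longer than it are not counted.
    """
    ordered = sorted(scan_times)
    gaps = []
    for previous, current in zip(ordered, ordered[1:]):
        gap = current - previous
        if gap > grace:
            gaps.append((previous, current, gap))
    return gaps


def total_time_off_task(scan_times, grace=timedelta(0)):
    """Sum all counted gaps into a single time-off-task figure."""
    counted = (gap for _, _, gap in time_off_task_gaps(scan_times, grace))
    return sum(counted, timedelta(0))


# Illustrative scan log (made-up times): one long gap, e.g. a walk to the exit.
scans = [
    datetime(2022, 1, 1, 9, 0, 0),
    datetime(2022, 1, 1, 9, 0, 40),
    datetime(2022, 1, 1, 9, 12, 40),
    datetime(2022, 1, 1, 9, 13, 10),
]
print(total_time_off_task(scans))
print(total_time_off_task(scans, grace=timedelta(seconds=60)))
```

Under such a rule, any activity that does not end in a scan, including walking to a break room, is recorded as idle time. This is consistent with workers' reports that a full break "costs" too much TOT.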

The effects of monitoring are aggravated once collected data serves as an input to algorithmic performance evaluations and discipline. Data-based, ‘objective’ performance evaluations promise to mitigate human bias and promote equity and fairness at work, but the objectivity and fairness of algorithmic decision-making is contested (Briône 2020; Parker and Grote 2020; PAI 2020).

Performance scores are typically based on collections of granular metrics reflecting work volume, quality and in some cases customer ratings, evaluated against some benchmark or target (Wood 2021). Algorithmic discipline refers to automatic punishment or reward based on workers’ recorded performance.

In practice, there are varying degrees of automating worker evaluation and discipline, ranging from algorithmic scores contributing to managers’ overall assessments, to the fully automated execution of disciplinary and reward measures.

In combination, algorithmic evaluation and discipline are often used to erect an incentive architecture that elicits worker behaviour that aligns with an organisation’s interest.

In platform work particularly, a near-fully automated system of evaluation and discipline severely undermines workers’ decision latitude. Drivers’ performance scores at ride-hailing apps Uber and Lyft are based on metrics including their ride-acceptance rate (above 80 percent), cancellation rate (below 5 percent), customer ratings (above 4.6) (Rosenblat and Stark 2016; Lee et al 2015) and driving behaviour (acceleration, speed and braking) (Kellogg et al 2020).

If they fail to achieve benchmarks, they risk automatic, temporary suspension or permanent deactivation from the platform (Lee et al 2015). At the same time, Uber offers occasional promotions, such as guaranteed hourly pay, for high-performing drivers (although the exact conditions for receiving the promotion are opaque) (Lee et al 2015; Rosenblat and Stark 2016).

Thus, workers face dual-control mechanisms – job insecurity on the one hand and better earnings on the other – that limit their discretion over which rides to accept or whether to cancel unprofitable ones.
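To make the benchmark logic concrete, the thresholds reported above can be written as a simple rule check. This is a hedged sketch, not the platforms' actual implementation, which is not public: the class and function names are hypothetical, and treating a value exactly at a threshold as a failure is an assumption.

```python
from dataclasses import dataclass


@dataclass
class DriverMetrics:
    acceptance_rate: float    # share of ride requests accepted, 0 to 1
    cancellation_rate: float  # share of accepted rides cancelled, 0 to 1
    customer_rating: float    # average rating on a 5-point scale


def failed_benchmarks(metrics):
    """List the benchmarks a driver misses, using thresholds from the text.

    Assumption: values exactly at a threshold count as failing.
    """
    failures = []
    if metrics.acceptance_rate <= 0.80:
        failures.append("acceptance_rate")
    if metrics.cancellation_rate >= 0.05:
        failures.append("cancellation_rate")
    if metrics.customer_rating <= 4.6:
        failures.append("customer_rating")
    return failures


# A driver who declines 5 of 20 requests falls to a 75 percent acceptance rate.
selective_driver = DriverMetrics(
    acceptance_rate=15 / 20,
    cancellation_rate=0.02,
    customer_rating=4.8,
)
print(failed_benchmarks(selective_driver))
```

The example shows why such a rule narrows discretion: declining even a handful of unprofitable requests is enough to miss a benchmark and risk suspension.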

Customer ratings increasingly feed into workers’ algorithmic performance evaluations, even in traditional, service occupations such as food services, hospitality or retail (Orlikowski and Scott 2014; O’Donovan, 2018; Levy and Barocas 2018; Evans and Kitchin 2018).

Workers may feel like they are under constant surveillance by customers and must present a friendly demeanour at all times, adding to the existing emotional demands of service occupations. Workers may also feel the need to comply with clients’ demands at the expense of safety for fear of receiving a poor rating (Mateescu and Nguyen 2019b).

Workers may engage in significant preparatory and emotional labour to make customers happy: ride-hail drivers supply bottled water or phone chargers, and observe and judge passengers’ moods to decide if they should make conversation or not (Rosenblat and Stark 2016).

Nonetheless, customer ratings are often perceived as idiosyncratic, reflecting not only the quality of the ride but also circumstances beyond drivers’ control, such as high prices, traffic, the mental state of the customer (Lee et al 2015) or customers’ biases (Rosenblat et al 2017).

While platform work is the most prominent example of the use of algorithmic evaluation and discipline, the practice is also gaining ground in traditional employment, including retail (Evans and Kitchin 2018), call centres (Hernandez and Strong 2018), public transport (Pritchard et al 2015), parcel delivery (Bruder 2015), trucking (Levy 2015) and, most importantly, warehousing (see eg. Mac 2012; Liao 2018; Gent 2018; Bloodworth 2019; Burin 2019; Guendelsberger 2019; Delfanti 2021; Gurley 2022).

While fully automated disciplinary actions are less common in these settings (Amazon’s automated firing algorithm made headlines in 2019 (Lecher 2019)), algorithmic performance analytics often play a critical role in supervisors’ decisions (Wood 2021), or subpar performance is flagged to a supervisor instantaneously (Burin 2019; Gurley 2022; Hernandez and Strong 2018).

Moreover, performance metrics are used in other ways to incentivise work effort and raise productivity. Increasingly, organisations are introducing game-like elements to performance tracking, creating high-pressure work environments. Workers may be shown a countdown that tracks how many seconds they have left to finish their task (Guendelsberger 2019; Bruder 2015; Gent 2018).

Visual, haptic or sound alerts that signal missed targets or low performance make workers aware they are being evaluated (Pritchard et al 2015; Guendelsberger 2019; Gabriele 2018). Dashboards that track workers’ progress and rank co-workers against each other introduce competitive dynamics in an attempt to stimulate effort, but undermine social support among colleagues (Pritchard et al 2015; Leclerq-Vandelanoitte 2017; Gutelius and Theodore 2019).

Similarly, the use of top-performers’ scores as productivity targets for their co-workers causes further work intensification (Burin 2019; Guendelsberger 2019). These high-pressure work environments and constant reminders of surveillance create fears of repercussions and incentivise workers to “beat the system” (Ajunwa et al 2017).

In order to achieve their SPORH targets (stops per on-road hour), UPS drivers circumvent safety protocols, putting themselves and others in danger (Bruder 2015). Levy (2015) found that truck drivers felt so pressured by a performance-monitoring system, they skipped breaks, safety checks and sleep in order to make up lost time in traffic or during loading and unloading.

Skipping safety measures is an unintentional result of surveillance being always incomplete, which is one of the biggest shortcomings of datafied performance assessments. The practice automatically gives more importance to quantified aspects of a job over more difficult-to-capture parts of work (Mateescu and Nguyen 2019a).

In order to meet performance targets, workers shift their efforts towards these quantifiable activities at the expense of others, reducing not only their task variety but also their autonomy to organise and prioritise.

In Evans and Kitchin’s study (2018) of a large Irish retailer, interactive emotional work was not captured by the big data infrastructure. Time spent on customer service not only goes unrecognised by the system, it also negatively affects other performance metrics.

As a result, workers reorient their efforts to become ‘data-satisfying’ rather than customer-centric, and describe customer service as a ‘thankless task’ (p.8). In certain settings, such as care work, such an incomplete definition of performance diminishes task identity and meaningfulness by devaluing the relational aspects of care, like companionship (Moore and Hayes 2018).

Defining a job in terms of excessively abstract data on fragmented, quantified output impedes a shared understanding of the work environment, with consequences for worker engagement and the relationship to supervisors and the organisation (Evans and Kitchin 2018).

Algorithmic coordination across tasks

The coordination of interdependent tasks, the collective or consecutive execution of which leads to the completion of a certain product or workflow, is a key function of organisations. Coordination of tasks might be needed across time – when one task needs to follow another – or across methods – when the execution of one task depends on how another was executed.

AI is used both to support coordination still done by humans and to automate it altogether, no longer needing a human in the loop.

Algorithmic support for task coordination is taking shape in the augmentation of communication technology (CT) at work, which is widely believed to have had ambiguous effects on job quality (Day et al 2019).

On the one hand, the use of CT has raised the efficiency of tasks and communication substantially (Ter Hoeven et al 2016) and has enhanced workers’ autonomy over when and where to work. On the other hand, CT allows decision-making to be centralised and could thus be associated with less autonomy over how to do the work (Bloom et al 2014).

CT also increases expectations that workers will be available and accessible at any time (Leclerq-Vandelanoitte 2015), and leads to less predictable workloads and more frequent interruptions (Ter Hoeven et al 2016).

AI likely amplifies all of these existing effects from CT. For example, augmented reality-enhanced glasses that provide point-of-view footage during remote collaboration could facilitate human task coordination (Bal et al 2021). But creating virtual office spaces using web3 applications may also exacerbate the always-on culture associated with digital communication technology.

Complete automation of task coordination using algorithms is widespread in online labour platforms, in particular localised gig work and online piece work. By automating the full coordination process between tasks done by different people in time-sensitive workflows (like ordering, preparing and delivering food), algorithms have taken over one of the key purposes of organisations.

No longer needing human coordination, platforms lead to the outsourcing of individual fragmented tasks to the market. These tasks often have low task identity; online piece work especially is often boring, repetitive or emotionally disturbing (Moore 2019).

Even though the lack of human management support may lead to a sense of isolation among some workers (Parker and Grote 2020), others may see this as an advantage. In Ivanova et al (2018) food delivery drivers described not having a boss ordering them around or monitoring them as a key benefit of working on a platform.

They also did not mind low task identity (ie. their work not being part of an organisational outcome), because this eliminates the emotional demands that come with dealing with customers or other workers in a hierarchy.

Contractual conditions are clearly impacted, as platform workers are often self-employed. This offers, in theory, greater flexibility in terms of workplace and time than standard forms of employment, but requires maintaining social security and insurance coverage independently.

Control over working time may be undermined by extremely low pay: while workers are in theory free to choose how much to work, in practice pay can be so low that many work continuously in order to make a living (Lehdonvirta 2018).

Moreover, since the volume of tasks assigned by the platform depends on demand and may fluctuate significantly from one day to another, self-employed platform workers may face significant job and pay insecurity (Lehdonvirta 2018; Parent-Rocheleau and Parker 2021).

The increased task fragmentation on platforms and the quantification of tasks at the most granular level imply that only explicit and productive working time is paid. Because workers are engaged only for the execution of a specific task, all preparatory labour that they undertake to improve their reputations and ratings (eg. cleaning their car) and all investments in skill development or infrastructure (eg. internet connection, computer setup) are unpaid, risking underpay and incentivising overwork (Moore 2019).

As labour demand under algorithmic task coordination grows, the use of precarious employment forms and zero-hour contracts may accelerate, excluding a growing share of the workforce from social security and other benefits of traditional employment (Moore 2019; PAI 2020; Parent-Rocheleau and Parker 2021).

Enabling task coordination at a global level may also give rise to the 24-hour economy, increasing commodification of labour (Moore 2019) and globally competing labour markets, placing further downward pressure on wages in high-income economies (Beerepoot and Lambregts 2015).

5 Improving technology design through worker participation and ethical principles

Technology is a product of power

The previously described effects of AI on job quality are not technologically predetermined but are the result of choices made by technology designers (AI developers) and job designers (managers) in response to economic, social and political incentives.

The absence of technological determinism for socio-economic outcomes was already elegantly argued by Heilbroner in 1967 and is widely accepted by now in the scientific literature (Vereycken et al 2021).

Therefore, harmful effects of AI in the workplace result from deficient design, arising either from unreliable data or the designer’s intention in constructing the algorithm.

Much of the debate on biases in AI focusses on the data aspect: incomplete, non-representative or historically discriminatory patterns in datasets used to develop AI might perpetuate undesirable social outcomes.

Just de-biasing datasets, however, is not sufficient and policy responses should move beyond mere technocentric solutions and consider the wider social structures (including power structures) in which the technology is deployed (Balayn and Gürses 2021).

Even with unbiased datasets, technology development is a product of power in organisations and therefore replicates existing power dynamics in society. This power imbalance in technology development is apparent across gender and race, as big tech has a notorious lack of diversity (Myers West et al 2019).

But it is pervasive also across socio-economic classes, as highly educated managers and technologists source and design software to control workers at the bottom of the corporate hierarchy. Pervasive data collection and algorithmic management applications are often implemented first in low-wage occupations characterised by low worker bargaining power (Parker and Grote 2020).

The adoption of those technologies further skews these power dynamics (Mateescu and Nguyen 2019a, 2019b), through deskilling effects, information asymmetry and lower efficiency wages from surveillance.

As a result, disadvantaged groups in society suffer more of the negative consequences of AI. For example, workers of colour, and particularly women of colour, in retail and food service occupations, are exposed disproportionally to just-in-time scheduling (Storer et al 2019).

The resulting unpredictable schedules are harmful for health and economic security (Harknett et al 2021), increase hunger and other material hardship (Schneider and Harknett 2021) and have intergenerational consequences through unstable childcare arrangements (Harknett et al 2019).

Worker participation mitigates the negative consequences of AI adoption

Meaningful worker participation in the adoption of workplace AI is critical to mitigate some of this power imbalance. It cannot be left to individuals to comprehend, assess and contest the applied technology, so collective interest representation and unions have a critical role to play (Colclough 2020; De Stefano 2020).

Employee participation in the adoption process varies in terms of timing and influence (Vereycken et al 2021): workers may get involved during early-adoption stages such as application design or selection, or be limited to implementation or debugging, and their influence may range from sharing their opinions with management to formal decision-making rights.

The earlier workers are involved, the greater their say and the greater the chance that their perspectives are incorporated into new technologies. At the very least, this can help to protect contractual working conditions.

For example, Findlay et al (2017) described how during the partial automation of a pharmaceutical dispensary, unions safeguarded workers’ contracts and remuneration. Worker participation should extend beyond the adoption process to the co-governance of algorithmic systems.

Colclough’s (2020) co-governance model follows the human-in-command principle and entails regular assessments that enable the identification of initially unintended consequences, and adjustments of the system to mitigate them.

Worker participation in technology implementation also benefits employers. Workers have a better understanding of their jobs than managers or technology developers. Leveraging this specific knowledge about their jobs, what work they entail and how good performance can be assessed improves algorithmic system design.

For example, housekeepers knew that hotel guests preferred their rooms to be cleaned by the time they return in the afternoon, and therefore prioritised cleaning theirs over rooms of guests who had checked out.

However, the algorithm assigned rooms to maximise room turnover, so that staff were unable to clean the rooms of current guests in time, triggering complaints (Reyes 2018).

Similarly, bus drivers understood that their performance evaluation system’s definition of ‘good driving’ was flawed, since a poor score from braking abruptly can signal safe driving when reacting to avoid an accident (Pritchard et al 2015).

These misspecifications make algorithmic systems less useful to workers and undermine acceptance (Hoffmann and Nurski 2021). They also incentivise workarounds. Bus drivers, for example, would not activate the system when no passengers were on board, or would deactivate it briefly to prevent it from recording a traffic event (Pritchard et al 2015).

Similarly, according to Lee et al (2015), Uber drivers circumvented ride allocations by logging out when driving through bad neighbourhoods to avoid penalties from declining ride requests.

To sum up, worker participation can ensure that algorithms are not imposed on the workforce but adopted in collaboration with them, and that benefits are shared between employers and employees (Briône 2020).

Ethical AI principles that moderate AI’s impact on job quality

Worker participation is not an end in itself, but a means to ensure that technology design incorporates features that mitigate job quality impact. Parent-Rocheleau and Parker (2021) highlighted three moderators in particular: transparency, fairness and human influence.

Clear explanations about why and how an AI system is used can mitigate adverse effects. Transparency over the system’s existence, the rationale for using it and the process leading to an algorithmic decision (explainability) enhance workers’ understanding of the algorithm governing their work.

Knowledge about the exact activities being monitored, for example, and what this data is used for, strengthens workers’ work-method discretion by enabling them to organise their tasks around the features of the algorithm.

There exists no consensual definition of algorithmic fairness, but widely accepted elements include: 1) absence of bias and discrimination, 2) accuracy and appropriateness of decisions, 3) relevance, reasonableness or legitimacy of the inputs into decision-making, and 4) privacy of data and decisions (Parent-Rocheleau and Parker 2021).

The examples in section 4 illustrate how violations of these principles undermine job quality and worker acceptance of algorithmic management. Biased customer ratings introduce discrimination to performance evaluations (Rosenblat et al 2017) and public worker rankings undermine team morale (Gutelius and Theodore 2019).

The failure to account for road conditions and the quality of vehicles led to inaccurate performance scores for bus drivers in London, causing overarching suspicion of the system’s reliability (Pritchard et al 2015).

Moreover, perceptions of organisational fairness in the workplace are situation-dependent, as illustrated in a case study by Uhde et al (2018) of a fair scheduling system in the healthcare sector, and highlight the importance of human rather than algorithmic intervention in resolving conflicts.

Empowering workers to exert control over an algorithmic system, for example by intervening in algorithmic decisions (eg. switching shifts with co-workers or declining tasks without penalty), overriding its recommendations or commenting on the collected data, safeguards worker autonomy and helps to overcome initial aversion to algorithmic governance (Parent-Rocheleau and Parker 2021).

Even before full deployment, a participatory approach to system design that allows workers to give feedback or to influence the choice of parameters factoring into decision-making will establish a sense of control. Regardless, meaningful human influence requires transparent and effective appeal procedures that empower workers to question, discuss or contest algorithmic decisions, and ensure human responsibility for decisions.

Transparency, fairness and human influence are common principles in AI ethics that extend beyond the scientific literature to policymaking. Other important principles at a global level include non-maleficence, accountability and privacy (Jobin et al 2019).

The OECD adds “robustness, security and safety and inclusive growth, sustainable development and well-being” (OECD 2019), and the EU’s high-level expert group on AI has determined “respect for human autonomy, prevention of harm, fairness and explicability” to be the four guiding principles to ensure ‘trustworthy’ AI (AI HLEG 2019).

6 Policy recommendations

Three European Union legislative initiatives are relevant in the context of AI and job quality: the general data protection regulation (GDPR (EU) 2016/679), the proposed AI Act (a product-safety regulation; European Commission 2021a), and the proposed platform work directive (a labour regulation; European Commission 2021b).

As a privacy regulation, the GDPR affects mainly the monitoring and surveillance aspects described in section 4. In addition to regulating personal data collection, the GDPR also ensures the right not to be subject to fully automated decision-making.

Exceptions to this rule, however, weaken its application in the employment context, for example when automated decision-making is required to enter into or enact a contract (De Stefano 2020). And, although the GDPR safeguards the right to contest algorithmic decisions, this protection is meaningless to workers unless they can demonstrate the violation of an “enforceable legal or ethical decision-making standard” (De Stefano, 2020, p.78).

Without enforceable ethical standards that workers can leverage, meaningful appeal is not safeguarded. Finally, as a privacy regulation, the GDPR accounts primarily for data input, but many adverse consequences of algorithmic management only emerge from data processing and inference once data is collected legally (Aloisi and Gramano 2019).

While the proposed AI Act has a broader focus than just the workplace, it does list employment as one of the eight high-risk areas that will be subject to strict requirements for providers and users, such as ex-ante impact and conformity assessments. From the perspective of this paper, the proposal has the following crucial gaps.

First, it is unclear how the roles of providers and users would translate to the workplace where there would be more parties involved (ie. the developer, the employer and the employee). While the proposal states that employees should not be considered users (see §36), it is unclear how the responsibilities are shared between the developer of the system and the employer.

Developer and employer could be two different parties, if the system is sourced from the market, or the same party, if the system is developed in-house.

Second, self-assessment by the provider of high-risk AI systems is not sufficient in the context of the workplace because of existing power imbalances between employers and employees (De Stefano 2021).

Worker representation and trade unions should be involved in order to assess correctly the potential risks associated with the system. Yet, the proposed regulation does not mention the role of social partners in shaping the use of AI systems at work (De Stefano 2021).

Third, the risks discussed in the proposed AI Act are expressed in terms of risks to health, safety and fundamental rights (see Article 14). As illustrated in this paper, risks from AI systems in the workplace also include threats to mental health, work stress and job quality in all its aspects (including precarious conditions, isolation and control).

De Stefano (2020) made the case for counting workers’ rights among fundamental rights, making labour protection an important tool to safeguard human rights, in particular human dignity, in the workplace.

In contrast to the AI Act, the proposed EU platform work directive is in fact a labour regulation and therefore does emphasise labour relations in this context. Chapter III on algorithmic management is applicable to all persons working through digital platforms, regardless of their employment status (self-employed or employee).

Articles 6 (on transparency), 7 (on human monitoring of automated decisions), 8 (on human review of decisions) and 9 (on worker consultation) correspond more or less to the design principles discussed above on transparency, fairness and human influence, and the mechanism to achieve these principles through worker participation.

However, the fairness aspect could be strengthened in the text. For example, Article 7 requires platforms to evaluate the risks of accidents as well as psychosocial and ergonomic risks, to assess the adequacy of safeguards and to introduce preventive and protective measures.

Other unfair effects of algorithmic management on job quality, such as redefinitions of paid work time or imbalanced performance evaluations, could be included here as well.

Finally, algorithmic management in various forms is already pervasive outside of platform work (as documented in section 4). We therefore urge the European Commission to regulate this practice also in traditional sectors of the economy.

In addition to the above comments on ongoing legislative initiatives, we raise some general concerns about the potential impact of AI on job quality. First, there is a risk of increasing polarisation in job quality, as existing occupational and socio-economic status differences are reflected in the way new technologies are designed and thus exacerbate power imbalances in the workplace.

Second, policymakers and employers need to look beyond working conditions into job design (job demands and resources) to understand the full impact of AI on job quality.

Third, different use cases of AI in the workplace have different effects on job quality, but in general, the more prescriptive the use case, the greater the harm to job quality.

Finally, ethical design choices, both in technology design and job design, matter very much and can mitigate many of AI’s potentially harmful effects.

Endnotes

1. Other definitions are in use among policymakers (eg. the OECD AI expert group (OECD, 2019) and the definition used in the proposed EU AI Act (European Commission, 2021a)), but most of them overlap to a large extent.

2. See for example Fayol (1916), Gulick (1937) and Koontz & O’Donnell (1968).

3. Cole and Kelly (2011) defined management as “enabling organisations to set and achieve their objectives by planning, organising and controlling their resources, including gaining the commitment of their employees (motivation)”.

4. Martela (2019), based on Puranam (2018), defined the “universal problems of organising” as division of labour (task division and task allocation), provision of reward (rewarding desired behaviour and eliminating freeriding) and provision of information (direction setting and coordination of interdependent tasks).

5. Work method autonomy: the degree of discretion/choice individuals have regarding the procedures/methods they employ in going about their work. Work scheduling autonomy: the extent to which workers feel they can control the scheduling/sequencing/timing of their work activities. Work criteria autonomy: the degree to which workers can modify or choose the criteria used for evaluating their performance (Breaugh, 1985).

6. Respectively questions Q54B, Q54A/C and Q61C on whether workers can choose or change the methods of work, the order and speed of tasks, and the objectives of their work.

7. See for example Bogen and Rieke (2018), Whittaker et al (2019) and Sánchez-Monedero et al (2020).

8. In 2017, four in 10 workers in the EU performed their work sitting down, ranging from 21 percent in Greece to 55 percent in the Netherlands (source: Eurostat dataset ILC_HCH06). Excessive sitting is associated with health risks including obesity, cardiovascular disease and back pain.

9. We discuss the implications of using such incentive systems to shape behaviour in the next section, ‘Algorithmic surveillance, evaluation and discipline’.

10. See the next section, ‘Algorithmic surveillance, evaluation and discipline’.

11. For a more extensive discussion of the use of artificial intelligence technologies to improve health and safety, see Hoffmann and Mariniello (2021).

12. Economic Efficiency Wage Theory assumes that firms pay wages above market clearing levels to increase productivity (when effort cannot be observed or monitoring costs are too high) or reduce employee turnover (when it is expensive to replace employees that quit their job).

References

Abeliansky, A and M Beulmann (2021) ‘Are they coming for us? Industrial robots and the mental health of workers’, Industrial Robots and the Mental Health of Workers, 25 March

Acemoğlu, D and P Restrepo (2019) ‘Automation and new tasks: How technology displaces and reinstates labor’, Journal of Economic Perspectives 33(2): 3-30

Acemoğlu, D and P Restrepo (2020) ‘Robots and jobs: Evidence from US labor markets’, Journal of Political Economy 128(6): 2188-2244

AI HLEG (2019) A definition of AI: Main capabilities and disciplines, High-Level Expert Group on Artificial Intelligence of the European Commission.

Ajunwa, I, K Crawford and J Schultz (2017) ‘Limitless worker surveillance’, California Law Review 105, 735

Akhtar, P and P Moore (2016) ‘The psychosocial impacts of technological change in contemporary workplaces, and trade union responses’, International Journal of Labour Research 8(1/2), 101

Aloisi, A and E Gramano (2019) ‘Artificial Intelligence is watching you at work: digital surveillance, employee monitoring and regulatory issues in the EU context’, Comp. Lab. L. & Pol’y J. 41(95)

Antón Pérez, JI, E Fernández-Macías and R Winter-Ebmer (2021) ‘Does robotization affect job quality? Evidence from European regional labour markets’ (No. 2021/05), JRC Working Papers Series on Labour, Education and Technology

Arai, T, R Kato and M Fujita (2010) ‘Assessment of operator stress induced by robot collaboration in assembly’, CIRP Annals, 59(1): 5-8

Autor, DH and MJ Handel (2013) ‘Putting Tasks to the Test: Human Capital, Job Tasks, and Wages’, Journal of Labor Economics 31(S1): S59-S96

Bal, M, J Benders, S Dhondt and L Vermeerbergen (2021) ‘Head-worn displays and job content: A systematic literature review’, Applied Ergonomics, 91, 103285

Balayn, A, and S Gürses (2021) ‘Beyond Debiasing: Regulating AI and its inequalities’, EDRi report.

Beerepoot, N and B Lambregts (2015) ‘Competition in online job marketplaces: towards a global labour market for outsourcing services?’ Global Networks, 15(2): 236-255

Berx, N, L Pintelon and W Decré (2021) ‘Psychosocial Impact of Collaborating with an Autonomous Mobile Robot: Results of an Exploratory Case Study’, in Companion of the 2021 ACM/IEEE International Conference on Human-Robot Interaction (pp. 280-282)

Bloom, N, L Garicano, R Sadun and J Van Reenen (2014) ‘The distinct effects of information technology and communication technology on firm organization’, Management Science, 60(12): 2859-2885

Bloodworth, J (2019) Hired: Six months undercover in low-wage Britain, Atlantic Books

Bogen, M and A Rieke (2018) ‘Help Wanted: An Examination of Hiring Algorithms, Equity, and Bias’, Upturn, December.

Breaugh, JA (1985) ‘The measurement of work autonomy’, Human Relations 38(6): 551-570

Briône, P (2017) ‘Mind over machines: New technology and employment relations’, ACAS Research Paper.

Briône, P (2020) ‘My boss the algorithm: An ethical look at algorithms in the workplace’, ACAS Research Paper.

Bruder, J (2015) ‘These workers have a new demand: stop watching us’, The Nation, 27 May.

Burin, M (2019) ‘They resent the fact I’m not a robot’, ABC.

Cohen, Y, S Shoval, M Faccio and R Minto (2022) ‘Deploying cobots in collaborative systems: major considerations and productivity analysis’, International Journal of Production Research, 60(6): 1815-1831

Colclough, C (2020) ‘Algorithmic systems are a new front line for unions as well as a challenge to workers’ rights to autonomy’, Social Europe 3, September.

Cole, G and P Kelly (2011) Management Theory and Practice, 7th edn. South-Western/Cengage, Andover

Day, A, L Barber and J Tonet (2019) ‘Information communication technology and employee well-being: Understanding the “iParadox Triad” at work’, The Cambridge handbook of technology and employee behavior: 580-607

De Stefano, V (2020) ‘Algorithmic bosses and what to do about them: automation, artificial intelligence and labour protection’, in Economic and policy implications of artificial intelligence (pp. 65-86), Springer, Cham

De Stefano, V (2021) ‘The EU proposed regulation on AI: a threat to labour protection?’ Global Workplace Law & Policy, 16 April.

Delarue, A (2009) ‘Teamwerk: de stress getemd? Een multilevelonderzoek naar het effect van organisatieontwerp en teamwerk op het welbevinden bij werknemers in de metaalindustrie’, mimeo

Delfanti, A (2021) ‘Machinic dispossession and augmented despotism: Digital work in an Amazon warehouse’, New Media & Society, 23(1): 39-55

Demerouti, E, AB Bakker, F Nachreiner and WB Schaufeli (2001) ‘The job demands-resources model of burnout’, Journal of Applied Psychology 86(3), 499

De la Garza, A (2019) ‘This AI software is ‘coaching’ customer service workers. Soon it could be bossing you around, too’, Time, 8 July.

de Sitter, LU (1998) Synergetisch produceren, Van Gorcum

De Spiegelaere, S, G Van Gyes and G Van Hootegem (2016) ‘Not all autonomy is the same. Different dimensions of job autonomy and their relation to work engagement & innovative work behavior’, Human Factors and Ergonomics in Manufacturing & Service Industries, 26(4): 515-527

Drake-Brockman, TF, A Datta and BS von Ungern-Sternberg (2016) ‘Patient monitoring with Google Glass: a pilot study of a novel monitoring technology’, Pediatric Anesthesia, 26(5): 539-546

Edwards, J, L Martin and T Henderson (2018) ‘Employee surveillance: The road to surveillance is paved with good intentions’, mimeo.

Edwards, R (1979) Contested terrain: The transformation of the workplace in the twentieth century, New York: Basic Books

Eurofound (2017) Sixth European Working Conditions Survey – Overview report (2017 update), Publications Office of the European Union, Luxembourg

Eurofound (2021) Working conditions and sustainable work: An analysis using the job quality framework, Challenges and prospects in the EU series, Publications Office of the European Union, Luxembourg

European Commission (2021a) ‘Proposal for a regulation of the European Parliament and of the Council laying down harmonized rules on artificial intelligence (artificial intelligence act) and amending certain union legislative acts’, 2021/0106 (COD)

European Commission (2021b) ‘Proposal for a directive of the European Parliament and of the Council on improving working conditions in platform work’, 2021/0414 (COD)

Evans, L and R Kitchin (2018) ‘A smart place to work? Big data systems, labour, control and modern retail stores’, New Technology, Work and Employment, 33(1): 44-57

Fayol, H (1916) Administration industrielle et générale : prévoyance, organisation, commandement, coordination, contrôle, Dunod H. et Pinat E., Paris

Findlay, P, C Lindsay, J McQuarrie, M Bennie, ED Corcoran, and R Van Der Meer (2017) ‘Employer choice and job quality: Workplace innovation, work redesign, and employee perceptions of job quality in a complex health-care setting’, Work and Occupations, 44(1): 113-136

Gabriele, V (2018) ‘The dark side of gamifying work’, Fast Company, 1 November.

Gent, C (2018) ‘The politics of algorithmic management. Class composition and everyday struggle in distribution work’, mimeo, University of Warwick.

Golden, L (2015) ‘Irregular work scheduling and its consequences’, Economic Policy Institute Briefing Paper #394.

Graetz, G and G Michaels (2018) ‘Robots at work’, Review of Economics and Statistics, 100(5): 753-768

Gregory, K (2021) ‘“My life is more valuable than this”: Understanding risk among on-demand food couriers in Edinburgh’, Work, Employment and Society, 35(2): 316-331

Grennan, J and R Michaely (2020) ‘Artificial intelligence and high-skilled work: Evidence from analysts’, Swiss Finance Institute Research Paper (20-84)

Guendelsberger, E (2019) On the clock: what low-wage work did to me and how it drives America insane, Hachette UK

Gulick, L (1937) ‘Notes on the Theory of Organization’, Classics of Organization Theory 3(1937): 87-95

Hernandez, D and J Strong (2018) ‘How computers could make your customer-service calls more human’, The Wall Street Journal, 14 June.

Gualtieri, L, E Rauch and R Vidoni (2021) ‘Emerging research fields in safety and ergonomics in industrial collaborative robotics: A systematic literature review’, Robotics and Computer-Integrated Manufacturing, 67, 101998

Gurley, LK (2022) ‘Internal documents show Amazon’s dystopian system for tracking workers every minute of their shifts’, Vice Motherboard, 2 June.

Gutelius, B and N Theodore (2019) The future of warehouse work: Technological change in the US logistics industry, UC Berkeley Labor Center