EU’s digital competition law can give users more control

Christophe Carugati is an Affiliate Fellow at Bruegel on digital and competition issues

In exchange for the services they provide, large online platforms including Google and Meta collect user data, which is used to offer tailored services, such as personalised advertising. The European Union’s Digital Markets Act (DMA, Regulation (EU) 2022/1925) contains provisions limiting how these services can collect and use personal data.

The DMA complements existing laws, such as the EU General Data Protection Regulation (GDPR), which have so far not been applied as thoroughly as they could have been to misleading practices used to obtain users’ consent to the use of their data.

There is also a growing perception that EU rules on collecting digital data have generated repetitive, burdensome requests to users that can lead to ‘consent fatigue’, and there is a risk that the DMA will make the problem even worse.

Implementation of the DMA should therefore ensure that users can consent to the use of their data without being subject to repeated requests and misleading practices.

The DMA’s effective consumer consent obligation

The DMA only applies to large online platforms acting as ‘gatekeepers’ in some core platform services, for example social networking services like Meta-owned Facebook (Carugati, 2023). The DMA requires them to comply with a list of positive and negative obligations.

In particular, the law restricts how they collect and use personal data, defined as any information relating to an identified or identifiable natural person (Articles 5(2) and 2(25) DMA). The restrictions prevent gatekeepers from performing certain practices unless users consent pursuant to the GDPR. Consent must be freely given, specific, informed and unambiguous (Article 5(2) and recital 37 DMA).

Specifically, under the DMA, without user consent, gatekeepers cannot:

-Process personal data obtained from third-party services that use the gatekeepers’ services to provide online advertising. For instance, a gatekeeper cannot process data from Match-owned Tinder to provide personalised advertising.

-Combine personal data collected for one service with data from other services, including its own and those of third parties. For example, a gatekeeper cannot combine data from a search engine service with another service.

-Use personal data collected for one service for other services they provide. For instance, a gatekeeper cannot use data from an intermediation service like a marketplace within another service.

-Sign in users of one service into another service that it provides to combine personal data. For example, a gatekeeper cannot use data from a user who is signed into a search engine and a map application to combine data.

The DMA applies without prejudice to the GDPR, which applies to all firms and not only gatekeepers, and which also governs how firms can collect and use personal data.

However, the DMA imposes stricter obligations on gatekeepers to ease market entry (contestability) and to redress the advantage gatekeepers have over small and medium-sized firms (fairness) thanks to the vast amounts of data they collect. EU legislators wanted to impose these stricter restrictions to prevent certain practices from happening in the first place.

The DMA does not make the GDPR redundant. On the contrary, the DMA specifies how gatekeeper firms can collect and use personal data in connection with the above-listed practices. Its specifications go beyond the GDPR in two ways:

First, the DMA specifies the legal bases gatekeepers can rely on to collect and process personal data. Under the GDPR, firms can process data under six legal bases: consent, contract, compliance with a legal obligation, the vital interest of the user, the public interest and the firm’s legitimate interest (Article 6 GDPR)1.

In practice, firms rely mainly on consent, contract and legitimate interest. The DMA mandates that gatekeepers use consent. Where applicable, they can still rely on the other permitted legal bases (legal obligation, vital interest and public interest), but they cannot use contract or legitimate interest to circumvent consent.
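The rule on legal bases can be illustrated with a minimal sketch. It assumes a simplified model in which a processing activity is either one of the DMA-restricted practices listed above or not; the names `LegalBasis`, `EXCLUDED_FOR_GATEKEEPERS` and `basis_is_available` are hypothetical and chosen only for illustration. It is not a statement of how compliance is assessed in practice.

```python
from enum import Enum


class LegalBasis(Enum):
    """The six legal bases listed in Article 6 GDPR."""
    CONSENT = "consent"
    CONTRACT = "contract"
    LEGAL_OBLIGATION = "legal obligation"
    VITAL_INTEREST = "vital interest"
    PUBLIC_INTEREST = "public interest"
    LEGITIMATE_INTEREST = "legitimate interest"


# Assumption drawn from the text: for DMA-restricted practices, gatekeepers
# cannot fall back on contract or legitimate interest instead of consent.
EXCLUDED_FOR_GATEKEEPERS = {LegalBasis.CONTRACT, LegalBasis.LEGITIMATE_INTEREST}


def basis_is_available(basis: LegalBasis, is_gatekeeper: bool,
                       restricted_practice: bool) -> bool:
    """Return True if the basis may be invoked in this simplified model."""
    if is_gatekeeper and restricted_practice:
        return basis not in EXCLUDED_FOR_GATEKEEPERS
    # Other firms, or practices outside the DMA list, fall under the GDPR
    # alone, where all six bases remain available in principle.
    return True
```

In this model, a gatekeeper that wants to combine data across services could invoke consent, but not contract; a non-gatekeeper firm is unaffected by the DMA restriction.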

Second, the DMA specifies how gatekeepers should request user consent. In line with the GDPR, it should be done in a user-friendly way, and should be explicit, clear and straightforward. Consent should require an affirmative action, and declining to consent should not be more difficult than consenting.

Similarly, withdrawing consent should be as easy as giving consent. The DMA offers three additional specifications that go beyond these GDPR requirements (Recitals 36 and 37 and Article 13 DMA):

-Gatekeepers should not use an interface design that deceives or manipulates users into giving consent.

-Gatekeepers should not re-request consent for the same purpose more than once a year when the user has not consented or has withdrawn her consent.

-If users do not consent, gatekeepers should propose an equivalent, less-personalised alternative of the same quality (a lower level of service is possible if it is a direct consequence of the inability to process data), without making functionalities conditional on the user’s consent.
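The once-a-year limit on re-requesting consent can be sketched as a simple date check. This is an illustrative sketch only: the function name `may_prompt_for_consent`, the choice to treat "a year" as 365 days, and the assumption that the prompt history is tracked separately for each processing purpose are all assumptions, not details taken from the DMA.

```python
from datetime import date, timedelta
from typing import Optional

# Assumption: "once a year" is approximated as a 365-day interval.
REPROMPT_INTERVAL = timedelta(days=365)


def may_prompt_for_consent(today: date, has_consented: bool,
                           last_prompt: Optional[date]) -> bool:
    """Decide whether a consent request for ONE purpose may be shown.

    has_consented: True if the user currently consents to this purpose.
    last_prompt:   date of the most recent request for this purpose that
                   the user declined or later withdrew from; None if the
                   user has never been asked.
    """
    if has_consented:
        # Assumption: no need to ask again while consent is in place.
        return False
    if last_prompt is None:
        return True
    # The user declined or withdrew: wait at least the full interval.
    return today - last_prompt >= REPROMPT_INTERVAL
```

For example, a user who declined on 1 September 2023 would not be asked again for the same purpose on 1 March 2024, but could be asked on 1 September 2024.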

With these specifications, EU legislators seek to prevent gatekeepers from steering users to consent using so-called dark patterns. These are manipulative techniques, such as deceptive button contrast, to push users in directions that are in the firms’ best interest (OECD, 2022).

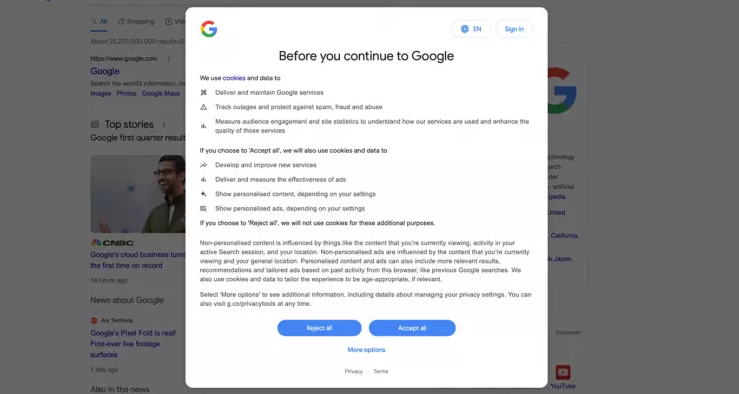

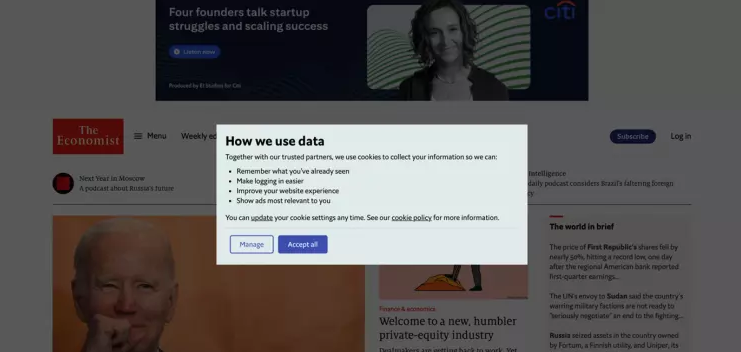

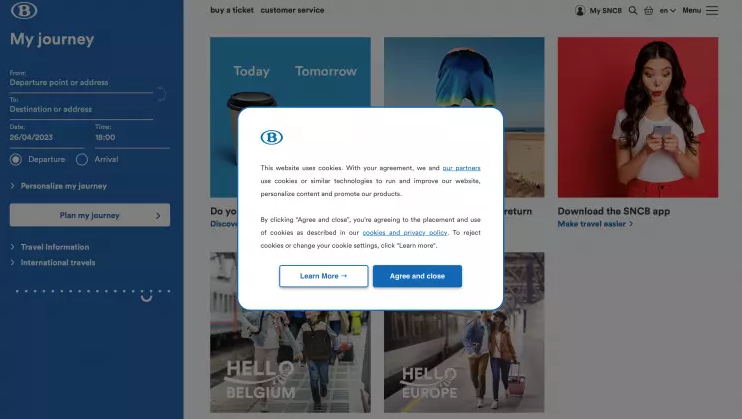

Dark patterns are common and take various forms in the digital economy. Firms of all sizes use them, including large platforms (Forbrukerrådet, 2018). The most notorious example is when users are asked to consent to cookies, which allow websites to track users’ online activities. Studies have shown that firms make it easier for users to consent than to decline by, for instance, not offering a reject button in the first layer of the banner (Nouwens et al, 2020).

Figure 1. An example of a consent banner that offers both an ‘accept all’ and ‘reject all’ button (accessed 26 April 2023)

Figure 2. An example of a consent banner that offers only an ‘accept all’ button. This might be considered a dark pattern (accessed 26 April 2023)

The failure to ensure effective consumer consent

The GDPR has been in force since 2018, but many data protection authorities have arguably been slow to use it to ensure effective consumer consent by tackling dark patterns. For example, France’s data protection authority, the Commission Nationale de l’Informatique et des Libertés (CNIL), published guidance on cookie banners in 2020 (CNIL, 2020), but issued several formal notices to firms about cookie banners only in 2021 (CNIL, 2021).

It named and shamed some of them, including Google and Facebook, in 2022, for not allowing users to decline cookies as easily as accepting them (CNIL, 2022)2. Moreover, it was only in January 2023 that data protection authorities at EU level provided guidance on how firms can seek consent via cookie banners that avoid dark patterns (EDPB, 2023). While enforcement is improving, cookie banners with dark patterns are still ubiquitous.

Despite these enforcement efforts, studies show that some websites drop cookies before obtaining consent, or even when the user does not consent3. Both the ePrivacy Directive, which governs cookies, and the GDPR require firms to obtain consent before collecting personal data via cookies, because consent would otherwise be meaningless. In France, CNIL fined the newspaper Le Figaro for these practices in 2021 (CNIL, 2021)4.

Meanwhile, some websites, such as French cinema site allocine.fr, ask users either to consent to cookies or to pay a fee to access the website (so-called ‘cookie walls’). CNIL considers such cookie walls GDPR-compliant under certain conditions (CNIL, 2022).

However, the authority ignores economic studies that suggest that users prefer a zero-price product over a paid-for one when they have a choice, even if the zero-price product is of lower quality (Shampan’er and Ariely, 2006). Even if users pay, some websites require them to create an account, enabling collection of user data as if the user had consented to the use of cookies.

There is also a growing recognition that users frequently encounter cookie banners when browsing online, leading to ‘cookie fatigue’ or ‘consent fatigue’. This leads users to ignore banners and automatically consent or decline with a feeling of resignation (Kulyk et al, 2020).

The United Kingdom’s data protection authority, under the UK G7 presidency in 2021, called for G7 countries to overhaul cookie banners5. The European Commission might soon propose an initiative to end cookie fatigue in Europe6.

Risks that the DMA might not ensure effective consumer consent

Because of the DMA provisions on consent, gatekeepers will have to request consent more often, in stark contrast with the desire to reduce the number of consent banners and to end consent fatigue.

Moreover, the DMA obligations will increase the amount of information users must deal with, as gatekeepers must provide unambiguous information for each practice targeted by the DMA.

This could lead to information overload, in which users face so much information that it becomes useless and meaningless for making effective choices (Thaler and Sunstein, 2021). Reading privacy policies is very time-consuming (McDonald and Cranor, 2008), and users therefore often accept the terms without reading or understanding them (OECD, 2018).

Therefore, even if the DMA prohibits dark patterns and allows gatekeepers to re-request consent no more than once a year, the increase in consent banners and information might prevent users from consenting effectively.

Recommendations to ensure effective consumer consent under the DMA

The European Commission cannot tell gatekeepers how to comply with the DMA obligation (Articles 8 and 13 DMA). However, it can monitor and enforce compliance (Articles 26 and 29 DMA).

In particular, the Commission can request that gatekeepers show in their annual compliance reports that their compliance measures are effective (Articles 8, 11 and 46 DMA). On the DMA obligation of effective user consent, the Commission should request gatekeepers to provide an independent behavioural study that examines:

-The design of each consent banner, to check that it is free of dark patterns, and

-User choices when gatekeepers present a choice between consenting in exchange for accessing a personalised service, and not consenting in exchange for accessing an equivalent service. The study should detail how gatekeepers provide equivalent, less-personalised services. Some gatekeepers could request a fee from users to access additional services without consent for personal data processing, something the DMA does not prevent. However, this practice would be likely to raise similar concerns to cookie walls, as it might undermine the concept of freely given consent, considering that users might not have real and satisfactory alternatives if they decline to consent. Gatekeepers might also increase the attention cost of using a service by, for instance, displaying more sponsored content or advertising in a newsfeed to users who do not consent. Gatekeepers might then argue that the lower quality of the service is a direct consequence of the inability to process data, as advertising or sponsored content is necessary to run the service for free. Whether these practices will be found DMA-compliant or treated as ways to circumvent the obligations will depend on what constitutes an equivalent service of the same quality, and on how gatekeepers offer it. Another challenging issue for the Commission is that users might not even realise they are receiving a lower quality of service if they do not consent.

Finally, the Commission should work with the high-level group composed of the European competition, data protection, consumer protection, telecommunication and audiovisual media authorities (Article 40 DMA). In line with its remit, this group should advise the Commission on how to deal with dark patterns, consent fatigue and information overload.

In particular, the group, together with the Commission, should carry out a behavioural study on how users perceive and interact with consent banners, separately and collectively, to monitor consent fatigue and information overload.

The group should also inform the Commission about how the DMA interacts with different legal regimes, including, but not limited to, data protection and consumer protection laws, and how to ensure a consistent approach between these legal regimes.

Endnotes

1. See GDPR Article 6.

2. See CNIL statement of 6 January 2022.

3. Kate Kaye, ‘Ad Trackers Continue to Collect Europeans’ Data Without Consent Under the GDPR, Say Ad Data Detectives’, Digiday, 4 October 2021.

4. See CNIL statement of 29 July 2021 (in French).

5. See ICO statement of 7 September 2021.

6. Luca Bertuzzi, ‘Cookie Fatigue: The Questions Facing the EU Commission Initiative’, Euractiv, 6 April 2023.

References

Cabral, L, J Haucap, G Parker, G Petropoulos, T Valletti and M Van Alstyne (2021) The EU Digital Markets Act, A Report from a Panel of Economic Experts, European Commission Joint Research Centre, Luxembourg: Publications Office of the European Union.

Carugati, C (2023) ‘The Difficulty of Designating Gatekeepers Under the EU Digital Markets Act’, Bruegel Blog, 20 February.

CNIL (2022) ‘Cookie walls: la CNIL publie des premiers critères d’évaluation’, 16 May, Commission Nationale de l’Informatique et des Libertés.

CNIL (2021) ‘Refuser les Cookies doit Être Aussi Simple Qu’accepter: La CNIL Poursuit son Action et Adresse de Nouvelles Mises en Demeure’, 14 December, Commission Nationale de l’Informatique et des Libertés.

CNIL (2020) ‘Délibération N° 2020-092 du 17 Septembre 2020 Portant Adoption d’une Recommandation Proposant des Modalités Pratiques de Mise En Conformité en Cas de Recours aux « Cookies et Autres Traceurs »’, 17 September, Commission Nationale de l’Informatique et des Libertés.

EDPB (2023) Report of the Work Undertaken by the Cookie Banner Taskforce, European Data Protection Board.

Forbrukerrådet (2018) Deceived By Design, How Tech Companies Use Dark Patterns to Discourage us from Exercising our Rights to Privacy.

Kulyk, O, N Gerber, A Hilt and M Volkamer (2020) ‘Has the GDPR Hype Affected Users’ Reaction to Cookie Disclaimers?’ Journal of Cybersecurity 6(1).

McDonald, AM and LF Cranor (2008) ‘The Cost of Reading Privacy Policies’, I/S: A Journal of Law and Policy for the Information Society 4(3): 543-568.

Nouwens, M, I Liccardi, M Veale, D Karger and L Kagal (2020) ‘Dark Patterns After the GDPR: Scraping Consent Pop-Ups and Demonstrating their Influence’, Paper 194, CHI Conference on Human Factors in Computing Systems, 25-30 April, 2020, Honolulu.

OECD (2022) Dark Commercial Patterns, OECD Digital Economy Papers No. 336, Organisation for Economic Co-operation and Development.

OECD (2018) Quality Considerations in Digital Zero-Price Markets, Background Note by the Secretariat DAF/COMP(2018)14, Organisation for Economic Co-operation and Development.

Shampan’er, K and D Ariely (2006) ‘How Small is Zero Price? The True Value of Free Products’, Research Department Working Papers No. 06–16, Federal Reserve Bank of Boston.

Thaler, RH and CR Sunstein (2021) Nudge: The Final Edition, Penguin.

This article was originally published on Bruegel.